Over the past year, our team at the Processing Division,GEOPIC has been tirelessly working on automating "Stacking Velocity Analysis" (SVA), a crucial component of the pre-stack processing workflow. And we have made a significant breakthrough !

Traditionally, SVA is a time-intensive task, requiring approximately one month of manual effort for an average-sized seismic processing project. Processing Analysts meticulously select time-velocity functions for CMP gathers in a regular grid. Our innovative tool, which integrates Deep Learning with physics-based constraints, reduces this effort to mere hours.

Here's an excerpt from the Executive Summary of the project.

In FY 2023-24, the Processing Division embarked on a transformative project titled Automatic Stacking Velocity Analysis Using ML to revolutionize seismic processing through the integration of cutting-edge AI and machine learning technologies. This initiative aimed to significantly reduce project turnaround times and enhance overall operational efficiency, adding substantial value by generating new intellectual property and incorporating advanced technologies into seismic processing workflows. The developed solution holds immense potential for elevating the quality and efficiency of seismic processing projects while delivering notable time and cost savings.

By leveraging sophisticated machine learning algorithms, the initiative yielded an auto-picker that autonomously generates stacking velocity fields, marking a substantial technological advancement over traditional manual methods. Velocity analysis, typically labor-intensive and time-consuming, now benefits from ML-driven automation. The new physics informed ML-based algorithm provides a robust initial stacking velocity model, serving as a robust baseline for expert analysts, thereby enhancing both precision and efficiency. Automating this critical aspect of seismic processing substantially reduces the time and effort required to produce accurate velocity models, accelerating project completion and enabling project teams to concentrate on higher-value tasks.

The project culminated in the creation of an innovative algorithm that autonomously computes stacking velocity functions for each Common Midpoint (CMP), effectively addressing challenges posed by noise and multiples. This algorithm leverages a High-Resolution Sparse Radon-Based Velocity Spectrum, which enhances the precision of velocity analysis, enabling detailed and accurate stacking velocity functions even in complex seismic environments. Additionally, a Deep Learning-Based Normal Moveout Tracker, leveraging a novel deep learning model trained on synthetic seismic data using unsupervised learning techniques, introduces a more direct higher-order coherence measure for deriving stacking velocity, surpassing conventional measures such as semblance and cross-correlation that can be ambiguous in the presence of noise. The algorithm also incorporates automatic Physics-Based Multiple Detection, further refining the velocity computation and ensuring reliable results.

Extensively tested on land and marine 2D datasets, the algorithm consistently delivers rapid results that align closely with traditional manual stacking velocity analyses, underscoring its efficacy and reliability in enhancing seismic processing workflows.

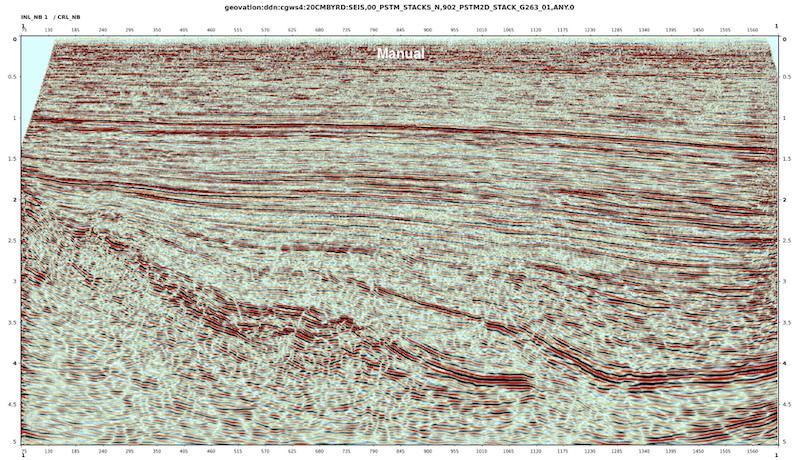

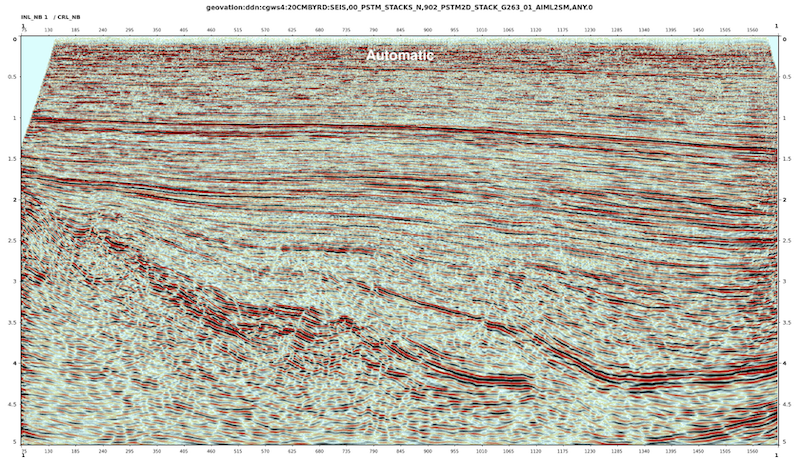

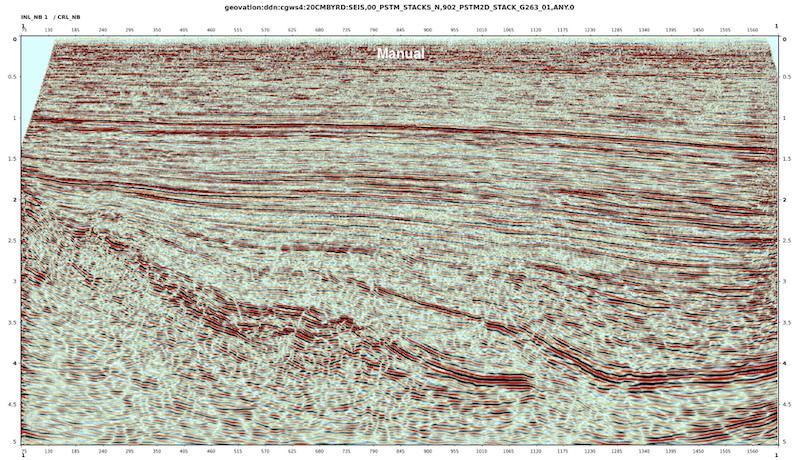

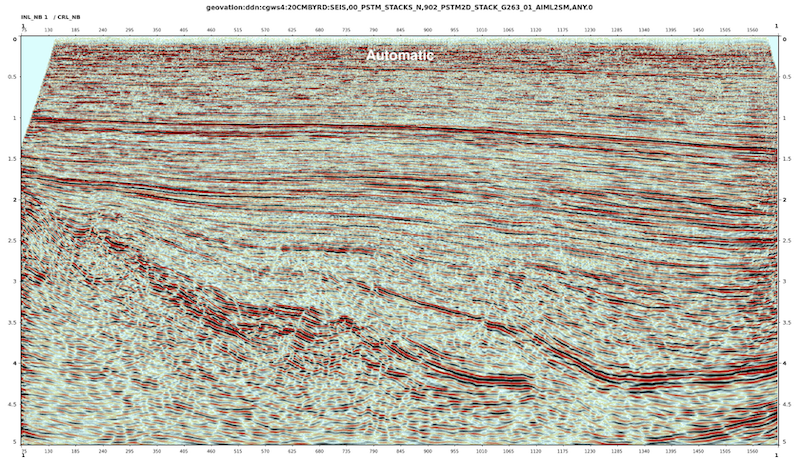

The images below present a comparison of stacks from a 2D line from a land survey. It showcases the stack generated using a manually picked velocity field alongside that produced by our automated solution. We have conducted similar tests across diverse geological settings, including the Eastern Offshore, Cambay Basin, and Frontier Basin, with consistently impressive results.

This advancement promises to significantly accelerate seismic data processing, potentially revolutionising internal practice.

The comparison reveals striking similarity between the "Manual" and "Automatic" stacks, underscoring the efficacy of our tool. At minimum, when integrated with our processing software, this innovation promises to provide an excellent initial velocity field, serving as a robust foundation for further refinement by processing analysts.

Developed entirely in-house, our tool is implemented in Python and harnesses the power of GPUs for optimal performance. We are currently pursuing patent protection for this groundbreaking technology while simultaneously developing plugins to seamlessly integrate it with our various internal processing software platforms.

This advancement not only promises to dramatically reduce processing time but also to enhance the overall quality and consistency of seismic processing, potentially setting a new internal standard for efficiency and accuracy in automatic velocity field generation.

Modified on by Jayanth Boddu 02F71794-8F71-4622-6525-7BA70043E8EB Boddu_Jayanth@ongc.co.in

|

I recently wrote a blog on my analytics website on predicting sonic logs using ML & Deep Learning Algorithms. I have been looking for ways to embed code here , so far the best way I've found is using an iframe. So here is the complete analysis with code. I hope it inspires someone to try it on their own. You can also read it here

Modified on by Jayanth Boddu 02F71794-8F71-4622-6525-7BA70043E8EB Boddu_Jayanth@ongc.co.in

|

Hydrocarbon exploration is strategic to the sustainability of economies of developing nations, with hydrocarbons still contributing to a major proportion of their energy basket. ( Ex : India , 30% — IEA 2020 ).

Understanding and characterisation of sub surface structures is paramount to evaluating new hydrocarbon ventures and undertaking exploration and development. Sub surface imaging is the de facto standard and an essential first step in building models of sub surface. Imaging is carried out through seismic surveys in which acoustic waves are sent through earth's surface and reflections are recorded using precision surface equipment. Chaouch and Mari 2006 provides a great introduction to seismic acquisition terminology.

Discovery of potential hydrocarbon zones is largely dependent on the quality of these seismic images. However, seismic images are inherently noisy ( Low SNR ) and band limited due to acquisition constraints and instrument limitations.

Noise and other image artefacts introduced by the constraints and acoustic attenuation of earth degrade the quality of seismic images , thereby increasing the uncertainty in exploration decisions.The aforementioned quality problem is further exacerbated by the growing industry trend towards deeper and thinner exploration targets, whose corresponding imaging shows higher degradation , thereby increasing the risk of skipping potential reserves. Hence, enhancement of seismic images is a primary endeavour in the exploration workflow.

This article briefly explains a few facets of the problem of seismic image enhancement and focuses on the recent applied deep learning research undertaken in this area. I hope this article brings readers up to speed on the recent research and generates interest in application of deep learning to the problem area.

The rest of the article begins with a brief treatment of seismic images and seismic image quality and explains two sub-problems of seismic image enhancement - seismic resolution & noise contamination. This is followed by a summary of methodology , major contributions and performance metrics used in the recent research focused on applying deep learning to seismic image enhancement.

Seismic Images are spatial records of reflected acoustic energy from the boundaries of various sub surface layers of earth. These records are a primary source of information that feed into seismic processing workflows which estimate various structural , topographical and geological characteristics of the sub surface. They are very important in estimation of quantity and quality of hydrocarbon reserves. The product of seismic acquisition over a region is a grid of 2D / 3D slices of seismic images which are then approximated as a 3D seismic image cube of the respective region. As mentioned earlier, the quality of seismic images affects resource and capital intensive exploration decisions. There are two major factors that affect seismic image quality and seismic interpretation results — Seismic resolution and Noise contamination.

Seismic resolution is a measure of minimum separation between reflection events — spatially and temporally. The minimum separation determines how close two events could be so that they are sufficiently distinguishable from each other i.e resolved separately. Seismic resolution is dependent on the signal bandwidth of the seismic source, sensitivity of receivers, density of seismic acquisition (i.e the number and separation between sources and receivers). Vertical seismic resolution determines the thickness of the thinnest bed that is distinguishable whereas horizontal seismic resolution determines the minimum lateral dimension of a feature , for the feature to be distinguished as a single interface. A high resolution seismic image is one where thin edges and shapes can be discriminated from each other. The edge thickness and shapes , among others are important information used by experts to interpret 'bright spots' with hydrocarbon potential.

Li et al., 2021 describes two potential categories of techniques for improving seismic resolution. High Density Acquisition attempts to increase the horizontal seismic resolution by increasing the density of seismic image slices per area by using a larger number of sources and receivers per area. Broadband seismic technique increases the vertical seismic resolution by in- creasing the recording bandwidth. BroadSeis is a popular product and service of this category. Although these techniques have showed improved seismic resolution, they have drawbacks like — high capital and operational expenditure.

Noise is another facet of seismic image quality. Noise is any unwanted and un-interpretable energy found in seismic images. Seismic image quality is hugely affected by the amount of noise contamination during seismic surveys.Sources of noise could be environmental interference, human activity, power generation etc.Noise affecting seismic images is broadly classified into coherent and incoherent noise. Incoherent noises are random noises caused by environmental interferences - Ex: airwaves.These noises are assumed to be Gaussian or white.Coherent noises are caused by seismic sources. Several coherent noises exist. For example, linear noise or ground-roll noise, multiple reflections i.e. each receiver receiving multiple reflections from the same sub surface boundary.

Noise contamination causes blurring and other image artefacts like aliasing effects in seismic images. These effects prevent accurate processing and interpretation of seismic images. Noise attenuation in seismic images can lead to improvement in perceptual quality and hence seismic interpretation. Traditionally noise sources are modelled and filters are constructed to remove each type of noise. However, field estimation of noise which is needed for noise modelling , is found to be resource intensive and specific to each field. Previous noise attenuation methods based on filtering techniques differ for each types of noise. Custom filters are needed to be constructed based on the characterisation of noise contamination and parameters need to be tuned to achieve reasonable attenuation. Further, filtering based noise attenuation techniques need sequential processing. Hence, there is need for uniform framework for general noise attenuation which can perform noise attenuation without prior knowledge of noise.

The field of deep Learning has enabled substantial improvements in several computer vision tasks by learning hierarchical representations of data. A few extremely successful DL architectures include - CNNs , GANs. Image enhancement was previously perceived as a problem in the domain of digital image processing and solved by constructing custom filters i.e. Image enhancement was previously considered a filtering problem. However, image enhancement using filtering has the disadvantage of image degradation modelling and parameter tuning to fit specific degradation. With the success of deep learning in CV , it could be applied to Seismic image enhancement problems, by formulating them as image translation tasks , converting the filtering problem into a prediction problem. In particular , seismic image enhancement could be considered a problem of improving seismic resolution and attenuating seismic noise. The problems could be framed as Image Super resolution problem and Intelligent de-noising.

Super Resolution (SR) is the image processing procedure of approximating a High Resolution Image (HR) version of a given Low Resolution image (LR). The SR paradigm considers the LR image be a degraded version of an HR image, where the degradation characteristics are unknown. The degradation could be loss of detail, down sampling, corruption etc. Deep Learning solves the SISR problem by supervised learning of a model of the unknown degradation function that maps a LR image to HR image. The model is trained on a dataset of LR, HR image pairs. Such a trained model can be used to create HR images of previously un- seen LR images. A brief survey of Single Image Super Resolution which deals with generating a HR image from a single LR image , is provided by Yang et al., 2019

Among the architectures proposed for SISR, SRGAN proposed by Ledig et al., 2017 was hugely successful in preserving the perceptual quality of natural images at up-scaling factors of 2x & 4x, by defining a new objective function which accounted for perceptual similarity rather than similarity in pixel space. The authors employed a deep residual network (ResNet) with perceptual loss calculated using the feature maps of the pre-trained 19 layer VGGNet. The training was carried out in an adversarial manner. In addition to Peak SNR (PSNR) and MSE (Mean Squared Error), the authors utilised a different metric to measure the quality of resolution enhancement called Structural Similarity Index (SSIM), as proposed by Wang 2004.

Dutta et al., 2019 proposed a modified SRGAN to enhance and de-noise 2D & 3D seismic images. To augment the image enhancement, the authors used Conditional GANs, where low resolution images are concatenated with a one hot encoded lithology class label at different depths. The conditional information was added to the input images in the form of additional channels. Up-scaling layers previously proposed in SRGAN were not used. The network was trained separately on 3D, 2D and corresponding sets with conditional lithology introduced at different depths. Image degradation, to be learnt by the network, was simulated by passing HR images through 5Hz LPF filter and adding 50 % uniform random noise. Conditional GAN methodology showed a PSNR gain of 12.21% and SSIM gain of 19.84% over SRGAN , when trained on 2D seismic images and 10.9% PSNR & SSIM gain when trained on 3D seismic images.

Halpert, 2018 adopted DCGAN architecture , previously proposed in Radford et al., 2015 for de-noising and resolution enhancement of seismic images. The author reported increase in higher wavenumber spectra in enhanced images compared to ground truth and LR images and qualitative results in improvement of perceptual quality. Li et al., 2021adopted CNN based architecture, a variant of U-net with sub pixel layer and residual blocks. The work enhanced synthetic seismic images with an emphasis on improvement of thin layers and small scale faults. The author trained the network for super resolution and de-noising using L1 loss coupled with MS-SSIM (Multi Scale - Structural Similarity Index). LR, HR pairs used were created by degradation emulated by adding random coloured noise with SNR ranging from 4-14. The work further provided evidence of image enhancement by showing improved performance on a fault detection task.

The task of de-noising seismic images has been successfully performed by both supervised methods as shown above and also unsupervised methods. A rigorous review of seismic image de-noising using deep learning has been provided by Yu et al., 2019. In particular, Chen et al., 2019 proposes a simple unsupervised auto encoder with a sparsity constraint which learns the representations of seismic signals from noisy observations. This method uses a simple three layer auto encoder and has been shown to be very effective in automatically removing spatially incoherent random noise. The key design insight of the above de-noising network is the introduction of a regularisation term based on KL divergence to the objective function of the hidden layer which helps the network to dropout non-trivial features that correspond to noise. Moreover, this work proposes the use of Local Similarity metric to quantitatively measure the signal damage due to de-noising. Such a measure of de-noising accuracy and reliability has not be used other recent studies. However, Chen et al., 2019 shows efficacy in de-noising only incoherent noise. The authors have also shown that quality of seismic de-noising is affected by the size of image patches used for training. The author explained this phenomenon by the fact that large patch size might impede the network from learning small scale features and small patch size would prevent the network from learning meaningful features. None of the other approaches have considered this phenomenon.

To summarise, this article breaks down the problem of seismic image enhancement into seismic resolution improvement and de-noising. It formulates these problems as prediction problems of image translation nature to be tackled by deep learning methods. It covers the relevant recent research in super resolution and de-noising. Note that the research reviewed is by no means exhaustive and I welcome any feedback and suggestions in improving my understanding of the subject.

I'm currently pursuing this problem area to write a master's thesis. You can help by suggesting papers and methodologies discussing important ideas related to seismic image enhancement that I may have missed.

|

This is a blog about the evolution of my thought process about building websites to maintain and disseminate information on inventory over the past three years. It has been a long journey from tinkering with databases and javascript and finally discovering the zen of csv files and python.

How does one manage inventory? There is no denying that everyone has this problem here. Even after commissioning special storage cabinets and labelled shelves , boxes, pouches and keeping spreadsheets on them, we were at a loss.

In spite of all that work of organising, inventory still has been a game of darts. We still had issues like sending the wrong parts to field. Field personnel and us fumbling on the part names, matcodes, manufacturing part numbers, purchase orders. Then there were other problems - like differences in packaging, similar names with different uses and the list goes on.

And we were back at how does one manage inventory. If you have noticed, questions like these have a way of starting slowly, seeming like a wrestle, followed by periods of being swept under the rug and one day, ending in the monster suddenly re-surfacing in intimidating forms.

Hyperbole aside , we noticed that there were several facets to the same problem. Let me see, if I could list all of them.

-

Not everyone uses a certain spare and hence not every one knows every thing. This is a Scope Problem.

-

Not everyone uses the same terminology. This is a Language Problem.

-

A person who knows and uses a spare part, might not necessarily be the best at describing it . This is a Language Problem too.

So what do you do when you decide to deal with the problem ? Like any good accountant - you look for the ground truth and build from there. It started with a simple and unoriginal idea. Descriptions are causing all the confusion, what if we could see a picture. May be, magically , this inventory problem would be solved.

So, for the next few weeks, our technicians religiously catalogued all our inventory all the while taking pictures of everything. For a while, the store looked like a forensics lab.

Initially, we used a laptop with a spreadsheet for data collection and a mobile camera for pictures.

Later, we graduated to using a simple google form. [ I assure you we gave due regard to the confidentiality & Integrity of the information ]

Now that we had all the information, our next step was to look for ways to make it available to everyone in the department. Our requirements were simple

- Everyone in the department can search for a spare part and find a picture, current inventory , location and any other meta data.

- The store keeper can add new spares and update inventory.

- If you are not a store keeper, you cannot make any changes to the inventory.

Right around this time, I had finished reading 'Eloquent Javascript' and a few books and tutorials on the MEAN stack including 'Web Application Development using MEAN stack'.

So charged with the problem and a means to solve it, I did the following which I present to you as Paradigm #1.

Paradigm #1 :

Build a full featured web site for tracking inventory. One where you could add, remove, update inventory. One with a login window, running on our local network.

So that's what we built.

- A website built using HTML, CSS, Javascript using the Angular 2 framework. I relied heavily on Bootstrap for styling. The website had three pages. Add, Update, Search. The search page would let you type in a keyword and it returned matches for matcode, part number, description, meta tags etc.

- All the data was stored in a No SQL database called MongoDB. Schema was really simple, one to two nests.

- Express.js Server. All the javascript code was heavily borrowed. We had created simple REST APIs and middleware for the Create-Read-Update-Delete loop. We tried integrating passport.js for authentication but eventually gave up. It had some 'session' issue that we could not solve.

- Node.js — Well although I mentioned this in the end, this was the basis for everything. My familiarity of working with Node.js in one of my previous projects really helped. [More on that in a different post.]

It look us about 3 week - one to two hours on each day , of tinkering got it running. Mostly everyone was happy. Except the guy who staked his life on the efficacy of excel sheets. This was uncharted territory for him and mostly he was worried that he would get a few weird columns in the excel sheet. We used mongoexport to export the database into excel sheet.

Well, looking back, the 'photo' idea seemed useful. As always, the person who appreciated it the most was the one who faced the most problems on a minute by minute basis, THE STOREKEEPER.

In its entirety, these are problems paradigm 1 solved , aka PROs :

- Availability

- Easy Inventory management

- Visibility

CONs of Paradigm 1 :

- Javascript Web App Development, especially Angular 2 is overkill for this application for a small user base.

- Each feature like search, pagination, mongodb integration took us time.

- Huge codebase. We literally spent many afternoons trying to solve a tiny bug.

- You cannot make batch updates to inventory. I mean you could if we wrote another page for that. Think of a basic Javascript TODO with a submit button.

- You need two different applications — a client app and a server app.

After this adventure, I also built a single terminal biometric attendance system using javascript.

Fast forward to March 2020, I began to take obsessive interest in data science. I entered the world of rectangular data aka dataframes. I was rapidly learning data manipulations and visualisations using Python packages.

Let me take a moment and acknowledge the delight of python.

For people coming from C, Java, Javascript, they are taken aback by the simplicity of syntax. Python one-liner codes are so popular, and there's so many of them, people have written books on them. Here's one. I can't resist but show you the python code for swapping two variables. ⇒ a,b = b,a. I could tell about list and dictionary comprehensions too , but you'll figure it. They are simple and elegant. If there's one thing I'd want you to take away from this, it would be : LEARN PYTHON.

In fact, let me introduce you to the guiding principles of Python language.

- Beautiful is better than ugly.

- Explicit is better than implicit.

- Simple is better than complex.

- Complex is better than complicated.

- Flat is better than nested.

- Sparse is better than dense.

- Readability counts.

- Special cases aren't special enough to break the rules.

- Although practicality beats purity.

- Errors should never pass silently.

- Unless explicitly silenced.

- In the face of ambiguity, refuse the temptation to guess.

- There should be one—and preferably only one—obvious way to do it.

- Although that way may not be obvious at first unless you're Dutch.

- Now is better than never.

- Although never is often better than right now.

- If the implementation is hard to explain, it's a bad idea.

- If the implementation is easy to explain, it may be a good idea.

- Namespaces are one honking great idea—let's do more of those!

A popular library for data manipulation in python is Pandas. You can import data from csv, xlsx, images, sql db, api, pdf into pandas and then filter, search and visualise. For example, if you have list of your inventory, it is really easy to do statistical analyses. If you want to know what you can do with pandas ? Look here

Getting back to our inventory problem, our application started misbehaving. Unresponsive database requests. Turns out a lot of packages we used to build our inventory app have been deprecated. We were living under a rock.

Now with these new found super powers, I started to rethink the inventory app problem. We already had the data. Our problem was to somehow use python and pandas and built a new website so that everyone got access.

Enter 'streamlit'. I think it was truly serendipity that brought us together. One lazy Sunday, I read a tweet about how they needed an equivalent of streamlit for Julia. Julia a high performance and scalable data analysis language. It claims to combine the speed and scalability of C with 'Python'y syntax. This tweet stoked my interest in streamlit and I spent the next few hours being blown away by the simplicity of it. With streamlit, you could build 'data' apps i.e websites backed by any of your favourite data containers like csv, excel, sql etc. One of its many great features is , it runs on python. Data scientists have been using streamlit to build and show case their data experiments. If you want to see what is possible with streamlit, go here. [have patience, it takes a while to load]

This is already too long for a single read, let me cover my streamlit experience in the next post. Stay tuned.

Modified on by Jayanth Boddu 02F71794-8F71-4622-6525-7BA70043E8EB Boddu_Jayanth@ongc.co.in

|

Happy New Year to everyone.

I'm Jayanth and I work with Logging Services, Mumbai. Welcome to the first post of my new blog 'New Horizons'.

I decided a while back that I'm gonna write more in 2021. Partly because I keep having all of these ideas I want to share and they have a way of slipping away . And mostly because, I like the feeling of being routinely surprised by something I've written a while ago and the fresh perspective it brings. Besides, I've been mulling over the reciprocity of the triad of writing, reading , thinking and this blog seems like both an exercise in the triad and a creative outlet.

I hope to write about my current interests and obsessions - Note taking , Data Science, Web Technologies , Privacy & Logistics - not necessarily in that order. As my first post, I wanted to share the principles of building a life changing productivity system I learned from Ali Abdaal.

So here goes.

All of us absorb a lot of interesting information from emails, blogs, videos, books, podcasts etc. I've always felt that most of this information does not reach its usefulness stage. And an extreme case in this is the proverbial out of sight, out of mind. You probably know people who have a million browser tabs open all the time, not closing them for the fear of forgetting what they just consumed. Our brains have a way of holding on to information that is most important to the current predicament. And there is no mistaking that the predicaments are plenty. If you're like me, who handles many disparate tasks to the point of turning into a context switching junkie, you must be thinking about a better way to organise everything you learn and catalogue it in a way that is meaningful / useful in the long term. All of this while reserving our brain's capacity to concentrate on the most important tasks at hand. Think of a personal wikipedia of information & insights and more.

Any efforts in this direction could be dubbed as 'building a second brain'.

This is repository of information and insights that you could fall back on - to make decisions, to draw inspiration and to get stuff done. In the long term, this becomes a source of abundance that you could start your projects with, instead of starting with a blank canvas. The last part I think is immensely useful, given there only so much CHRONOS that goes around. 'Second Brain' is a super charged productivity / knowledge management system. Its about creating a repository of information that you could use to complement your brain. This is productivity system that Ali Abdaal claims to have changed his life. The overarching principle behind building a second brain and gaining the most out of it, is the acronym - CODE : Capture - Organise - Distill - Express. Capture all the information you finding interesting. This could be your own ideas or the ideas that you learn from content you consume. Capturing could be in various ways - voice memos, excel sheets, note taking apps etc. Catalogue all the ideas and give them context. Understand the essence of the captured information. Summarising the content , writing about it is a great way to churn out the essence. And finally the only way you own the information is by making something of it. This could be a blog, a personal project or any creative endeavour.

With due regard to the above overarching principle, there are 10 fundamental principles of building a second brain :

-

Borrowed Creativity

- Creativity is all about remixing stuff. It is about taking stuff that's already been made and adding your own new perspective to them.

- Its like what Pablo Picasso said , 'Good artists copy, great artists steal'

- It's one of the key ideas of second brain system since organising your knowledge helps in finding connections that may have not existed / discovered earlier.

- Besides, its easier and quicker to make connections using a repository of information than trying to visualize in your head.

-

The capture habit

- Your brain is to have ideas, not store them. Capture all the ideas you have, coz your brain might not hold them on for long. These could be ideas that you get when you were jogging, taking a shower. Note these ideas down

- Create mechanisms to capture ideas. For example, use a digital assistant on your phone while driving , voice memo apps.

- I get most of my creative ideas during or right after some physical exercise, so I intend to use Siri to capture them.

-

Idea recycling

- Ideas are re-usable.

- Keep track all of the content you are creating and all the ideas that are being used in them.All the blogs, websites, apps , emails and any creative endeavours are to be documented so that they could be reused somewhere down the line.These ideas then become building blocks that you could probably re-use for newer stuff.

- For example, the proposal you recently made to your boss could be taught as a course. Or a code block from a web app for inventory could be used for a new website you might build.

-

Projects over categories

- Associate all the information and learnings to a particular project instead of categories. For example, it is more beneficial to associate a new blog you read on logistics to your shipments project rather than adding it to a folder called Logistics.

- Such categorization doesnot help because it is not directedly useful and also it might lead to loss of context over time.

-

Slow burns instead of heavy lifts

- A heavy lift is like picking up that one task and pausing everything else until you finish it. It could be thought of , as a series way of handling tasks. For ex : Going to a cabin the woods for three weeks to write a book. Or dedicating an entire week for just building a new feature into a website.

- Slow burns are like having multiple projects at the same time , but being handled slowly in parallel. For example, doing a couple of courses and building a product specification, writing a project proposal, all in parallel and each task in small chunks and progression. So overtime, Instead of doing 1 big project one at a time, you could do 10 different projects all at the same time, slowly until they complete.

- Slow burns are beneficial in the sense that they would let you capture all the things that you learn along the way and use them to complete the tasks. Overtime, you could read, listen and watch ideas and come up with ideas of your own and use these to further the slow burn projects.Instead of a heavy lift, through a slow burn , you could slowly capture ideas from the internet and catalogue them in your second brain

- Slow burns are beneficial when you have an effective way of capturing all the ideas, notes and material in one central location and somehow linking this repository to the something that tracks the progress of each burn. Also, if the information is organized in one place, it takes less time to consolidate them and apply them to the project.

-

Start with abundance

- Never start a task like writing an proposal / email with a blank page.

- If you had a second brain, you could start with abundance of articles, notes, inspirations that you have annotated and collected over time. You could use these bits to conjure interesting arguments / content . And ultimately, this could make a really good article, blog etc.

- This is exactly like sifting through your brain for ideas / past experiences and you are basically starting any task with the wealth of information that you have decided that its worth saving in the past.

-

Intermeddiate Packets

- All the tasks that you do are made up of intermeddiate packets. For example, any procurement proposal / webpage specification has certain blocks that are interchangeable and useful for several others.

- Organise and create such re-usable intermeddiate packets.

- This would not only make the task easier , it would aslo help starting with a task.

- Ensure that your second brain is built in a way that makes it easy to think in terms of tasks as intermeddiate packets

-

You only know what you make

- You only internalize the stuff that you create. That's what affects your lives and is probably useful in the long term. It doesnot matter the number of podcast that you listen to , the movies you watch , the books you read unless at the very least you write summaries / reviews, you use them to build things, write intermeddiate packets, catalogue them into your second brain

- Always make notes of all the content that you consume.

-

Don't assume intelligence on the part of your future self

- The way to think of your second brain is that the effort you put in now would help your future self.

- Dont assume too much intelligence in your future self, create context for everything that you record.

- For example, if you're writing a daily log about the activites of a particular day with the objective of using them write your self appraisal comments at the end of the year, record in a manner that explains the content and context well, assuming no knowledge.

-

Keep your ideas moving

- This is the anti perfectionist take on the entire building a second brain.

- Do not fall into the trap of building the perfect note taking, habit tracking, project structure.

- The most important thing is the output. It's not about the perfect note taking system, its about improving the system overtime and focusing on the output.

Note that these ideas are not mine at all. This is an endeavour in 'you only know what you make'. If you prefer the video format, check out Ali Abdaal's youtube channel.

My personal choice of second brain was Microsoft Onenote in 2019 and 2020 where I extensively catalogued all my work and personal knowlege. This helped me craft some interesting emails and wrap my head around my routing tasks and workflows. Recently, I spent a few weekends tinkering with Notion because of some glitches with OneNote and I'm convinced that it is the best note taking / knowledge management solution out there for the flexibility and organisation capabilities. Feel free to contact me if you want to discuss more about the second brain idea or just setting up your Notion workspace.

Wish you happy days ahead.

Modified on by Jayanth Boddu 02F71794-8F71-4622-6525-7BA70043E8EB Boddu_Jayanth@ongc.co.in

|

Artificial Intelligence (AI) is the ability of machines to perform tasks that conventionally required human cognition. AI is estimated to create economic value of $ 13 trillion by 2030 with an impact equivalent to $ 173 billion to oil and gas industry. In light of the huge disruption potential of AI, ONGC Management has integrated the digital use case of 'Predictive Exploration' as part of ENERGY STRATEGY 2040. AI is the core component of Predictive Exploration.

I write this article in an effort to propose 9 potential use cases of predictive exploration in well logging. The rest of this article provides a brief summary of AI, current AI opportunities and trends in E&P industry, upstream AI adoption themes. This is followed by a detailed description of predictive exploration use cases in well logging and current limitations of AI in geoscience.

Artificial intelligence is a sub field of data science , the science of extracting knowledge & insights from data. Machine learning (ML) , an AI paradigm is attributed to most of the recent progress in the field of AI. ML teaches machines to perform specific decision tasks without explicit programming. ML techniques can be broadly classified into supervised ML and unsupervised ML. Supervised ML can be succinctly summarised as — teaching a machine to learn a mapping from Input to output ( A to B ) by training it with several examples of (A,B). Once the training is complete , the machine is said to have learned a 'model'. This model could then be used to predict B, given A.

Unsupervised learning is useful for initial data exploration to find interesting structures in data.

ML predictions ( if reasonably accurate ), could be used for automation of tasks in existing human centric workflows. Hence, AI could be understood as a general purpose technology for automating tasks in a workflow. The deliverable of an AI implementation and Supervised ML in particular is a task specific model, that would automatically predict an output for a given input. It is necessary to emphasise that current AI solutions are very task specific. Models trained on one task are suited to performing the said task only.

In its current state, AI can automate any task / decision which takes a human about 1-2 minutes to make. But there is a negative test of appropriateness of applying AI to a particular task — Does the task consist of well defined rules and / or empirical relationships ? If yes, AI might not bring value to the task. AI is useful for tasks with a lot data ( examples of input - output relationship ) and complex relationships between input and output. The value that AI brings to these tasks is learning patterns and relationships present in existing examples and producing statistically consistent outputs given new inputs. Mathematically speaking, ML models are universal function approximators that learn complex functions from observations. This makes ML models better at learning from 'unknown knowns' and 'known knowns' present in data and predicting outputs better and faster than humans.

From an economic standpoint, AI makes prediction ( a fundamental component of intelligence ) cheaper. Since prediction complements judgement which drives business decisions, AI has been transforming businesses by automating repetitive tasks , accelerating workflows and making the businesses more data driven.

The ongoing evolution of the E&P industry, dubbed as Oil & Gas 4.0 has one core goal — to achieve greater business value through adoption of advanced digital technologies. Among these, AI & ML have shown huge potential in improving efficiency by accelerating processes and reducing risk.

In particular, the upstream industry is capital intensive and characterised by high risk and uncertainty. Further to exacerbate the challenges, upstream processes rely on expert knowledge , involve subjective perception and experience based decision making, are time and resource intensive , sometimes have no objective measurement criteria.

Hence, there is huge potential for ML / AI tools to not only accelerate and de-risk processes but also establish quality baselines and bring consistency to decision making inputs in upstream.

E&P industry is already seeing a few broad themes in exploiting AI tools, namely reducing the 'Time to Value' of the gathered data and building a 'Living Earth Model' which automatically updates with new knowledge. Further, most use cases have been targeted towards

- Data driven studies as a faster and less accurate alternative physics driven studies.

- Providing baseline interpretation metrics for subjective results.

- Automating data quality checks

In line with the industry needs, the most successful use cases of ML / AI in upstream have been the ones which provide tangible process acceleration benefits and significant reduction of human errors in mapping hydrocarbon targets.

- Tools for automated mapping of reservoir rock properties over an oil region ( accelerated from several weeks to several seconds )

- Tools for extracting geological information from well logs ( 100 + times speed up )

- Tools for rock typing based on images of rock samples extracted from wells ( ~1,000,000 times speed up )

As a compliment to industry adoption, there has been increased activity in the scientific community in applying ML to geoscience. Recent times have also seen a significant AI drive by professional bodies like SPE, EAGE, SPWLA, SEG. The number of quality research publications in scientific journals and conference proceedings has seen a steady rise. The industry's estimate of AI's current value can be judged by high reward ( $ 100,000 ) open competitions like 'Salt Segmentation in Seismic Cubes'. This is further augmented by community contributions to developing ML applications with business impact. For instance, the code implementations of most competitions have been open sourced to tinker and build on.

AI in Well Logging

Well logging gives precise information about various physical properties of the sub surface along the well bore, with measurement resolution in centimeters. Well logging employs sensors which measure electrical resistivity, natural gamma ray intensity, response to magnetic excitation, neutron density and some others. Petrophysicists use well logging data for their interpretation routine, including rock typing , estimation of porosity and permeability and estimation of relative fluid saturation along the well bore. Petrophysical interpretation is a time consuming process and its results strongly depend on the domain expert. ML can reduce time and bring consistency to interpretation results.

In view of this, I propose the following AI use cases of Predictive Exploration in well logging

- Automatic Reconstruction / generation of missing sonic logs

- Automatic Lithology prediction

- Generating Density logs from other logs

- Automatic NMR generation

- Predicting porosity, permeability and water saturation using well logs

- Auto Interpretation of CBL Logs

- Auto log QC

- Log Recommender System

- Auto Sonic Correction using VSP & Facies

( Note that research papers with various levels of evidence are hyperlinked for use cases # 1-6 . Use cases #7-9 seem very feasible in view of their similarity with the other use cases. )

Automatic generation of missing sonic logs is very useful to generate sonic logs for old wells to feed into well-seismic tie workflows. Automatic lithology prediction can be explored as a fast alternative to detailed lithology analysis carried out in core laboratories. Generating missing density logs using existing logs can be used to provide missing data for feeding into reservoir characterisation workflows. Automatic NMR generation namely MPHI (effective porosity), MBVI (irreducible water saturation), and MPERM (permeability) from conventional well logs can be used to improve the estimation of economically recoverable hydrocarbons. Estimation of porosity, permeability and water saturation from well logs is a faster alternative baseline to the empirical calculations. Auto interpretation of CBL logs not only accelerate cement integrity checks but also brings consistency to expert driven analysis. Auto Log QC can be employed provide an automatic mechanism to check log quality before ingestion into the G&G data warehouse.

Log Recommender system is a recommendation engine aimed to reduce the volume of physical log measurements. The current state of the art hypothesises that most logs could be reconstructed or generated from existing well logs. Hence, a recommender system can be built to provide recommendations on the logs to be recorded based on the log reconstruction accuracy / quality.

Auto Sonic Correction using VSP & Facies aims to provide environmental corrections to sonic logs recorded in wells where VSP was not undertaken, by learning the same from existing VSP survey data.

For the implementation of # 1-4, an ML model could be trained specific to each use case. The ML model is trained on logs recorded in existing wells in the field to predict logs / properties in different well. For example, let us consider the use case of Generation of missing sonic log. Say, Wells A & B have gamma ray, density and sonic logs and Well C has gamma ray and density, but sonic log is missing. One may create a dataset of Well A & B with inputs as gamma ray log and density log , output as sonic log. The trained ML model can be used to predict sonic log of Well C using the gamma ray log and density log recorded in Well C.

ML models learn from underlying statistical relationships between existing inputs and outputs to predict outputs for new inputs. In the case of well logging, in the fields that exhibit adequate homogeneity in the characteristics of sub surface through out its extent, the existing field data could be used to predict field properties.

For the use cases # 5,6 , ML models can be trained on datasets created from existing well log data where the needed output is already ascertained by an expert. For example, for auto interpretation of CBL logs, experts could divide the log data into equal segments and assign a cement quality index of 1-6 for each segment. ML model could be trained to predict cement quality index for an input of log curves. The trained model could be used to automatically predict cement quality on new CBL log data in the same field. These predicted results could be used as rapidly generated baseline results for further interpretation. Auto log QC can be developed in a similar manner.

Although more advanced use of AI is possible, the above use cases represent the 'low hanging' fruit for the following reasons.

- The first challenge for ML is creating quality datasets. Datasets with well defined structure are an easy target for building models that scale well. Well logs are almost structured data. ( Data structure is constant for each service / technology provider )

- Our G&G data warehouse already stores well logs in a well structured format. This could be leveraged to create field specific models. Further, some predictions can be automatically performed in the background for each log upload and generated as files for immediate consumption.

Please note that this article only covers some possible aspects of the geoscience parts of well logging. Operations and business process related use cases are not covered.

References :

I hope this helps each stakeholder in the conversation around the current digitalisation initiative.

I am excited about this new venture of ONGC and would be delighted to explore these use cases further.

|

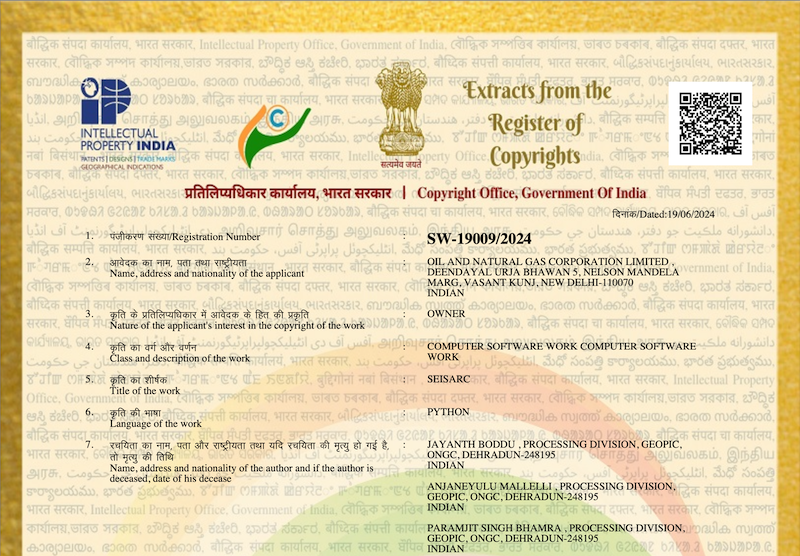

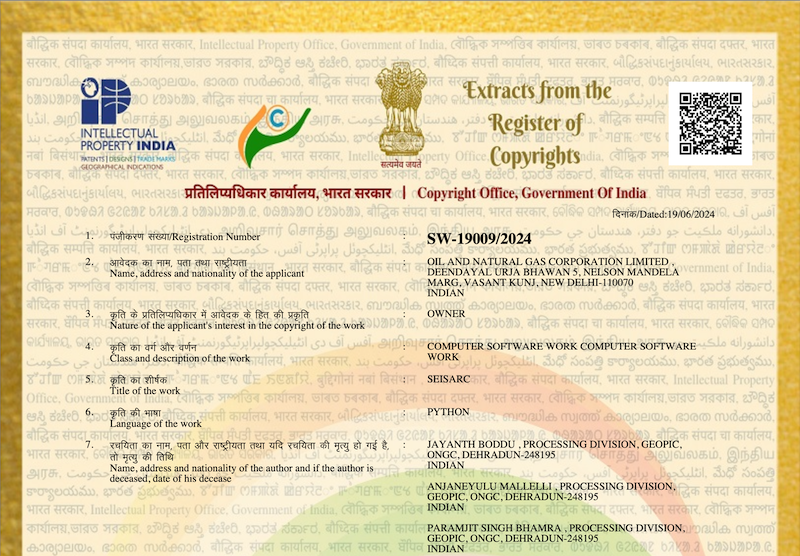

The Copyright Office, Government of India registered a Copyright (# SW-19009/2024) for the groundbreaking in-house software, SEISARC developed by our team at Processing Division, GEOPIC.

SEISARC is one of the first copyrighted innovations from the Seismic Processing team @ GEOPIC. This Python-based, multi-system automation tool is designed to simplify the archival and delivery of large-volume seismic processing projects. As exploration acreage expands and data resolution and diversity of processing deliverables increase, SEISARC ensures the integrity of seismic processing deliverables, optimizes resource utilization, and boosts productivity, giving our team more time to enhance imaging deliverables.

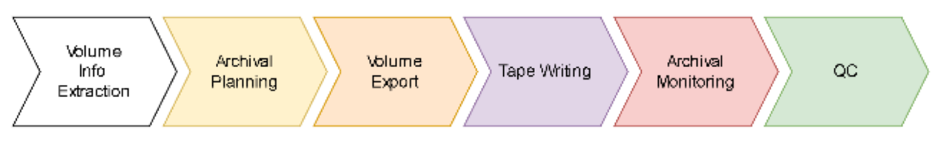

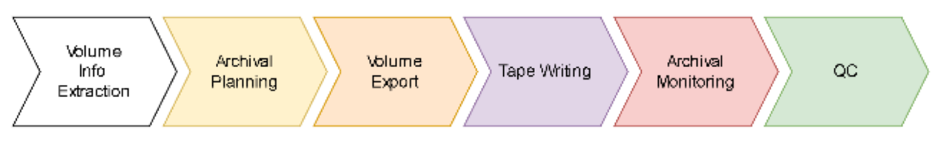

In large-volume projects, the data size exceeds the storage capacity of a single unit of tape media technology currently available. Consequently, the archival process involves partitioning the project volume into multiple SEGY files, each SEGY containing a subset of the overall project data, specifically data related to a range of inline (IL) lines. The IL range within each SEGY is determined based on the maximum capacity of the tape media. These SEGY files are initially exported to disk storage before being written onto tape media. To conserve shared disk space and compute resources, the volume export jobs are executed in phases. Each generated SEGY file is transferred from disk to tape using a Remote Tape Library (RTL) controlled tape drive. Following each tape write operation, a small subset of the written data is read back to disk and imported into the processing software for quality control (QC) of the archived data.

Currently, analysts manually manage data export/import jobs, RTL servers, and disk space. This manual intervention in repetitive tasks not only consumes valuable analysis time but also introduces the potential for human errors. To understand the scale of the problem, we could consider a typical marine seismic project covering 6000 sq. km with a fold of 50. The archival of this project requires approximately 350 TB of tapes for archival, involving around 84 export jobs and equivalent disk space. If the archival process were run continuously with four tape drives operating in parallel, it would take approximately 10 days, assuming each export job takes 5-6 hours and tape writing takes 3-4 hours per tape. Additionally, due to the use of different software and hardware systems for processing and archival, the archival process requires continual management of volume exports due to various constraints. These constraints include the upper limit on the number of data exports before exhausting the permitted disk space for the project and the need to maintain a minimum number of SEGY files ready for input buffering during tape writing to maximize the utilization of the available RTL infrastructure. Tape writing also involves multiple manual and sequential operations per tape, along with manual data dumps for archival QC and manual deletion of SEGY files to conserve disk space.

An ideal archival process is fully automated and integrates the different software (s/w) & hardware (h/w) components of archival. This automated pipeline efficiently handles the entire archival process from partitioning of the project volume into multiple IL ranges to exporting the corresponding SEGYs for each IL range. It effectively buffers export jobs and tape writes taking into account the limited disk space and tape writing hardware. It automates the hardware control operations of assigning-loading-writing-unloading multiple tape media. Furthermore, such a process offers visibility of the entire archival pipeline including real-time updates on the current status and the estimated time of completion of the entire archival pipeline. However, implementations of the aforementioned ideal archival process are not uniformly found across processing software currently in use. Consequently, this leads to sub-optimal utilization of resources, increased manual work, and elevated risk of human errors. Additionally, in most cases, the archival infrastructure is designed to meet the average demand rather than peak demand. Furthermore, the infrastructure is shared between multiple projects with varying timelines. Hence, there is a pressing need for accelerating the archival process and optimizing resource utilization.

Moreover, each phase of archival has a few technological gaps. For example, existing processing software lacks essential functionality such as estimating the memory footprint of each inline when exported as SEGY, close integration of the processing software with the tape writing server, etc.

Hence, the team framed the manual intervention required in each phase of archival as an automation problem needing the integration of multiple systems – the processing software, filesystem and Remote Tape Library. To address this challenge, and minimize the time and manual effort involved in seismic archival, they built a Python-based command line tool. This tool simplifies & accelerates each phase of the archival process, offering a streamlined solution to enhance efficiency and reduce manual intervention.

Introducing automation to each phase of the archival process increases productivity, optimizes resource utilization and reduces the scope of human error. This shift would allow for increased focus on improving processing output while reducing the time allocated to archival tasks. Additionally, it enables the efficient utilization of disk space, tape drives, and the processor's time. Automation helps mitigate errors which contribute to time and quality losses -- such as an export job or tape write failures, occurrences of "right data, wrong headers," and duplicate IL range writing.

This work has led to a multifold increase in speed and a decrease in project time spent on archival. So far, the archival of 03 internal project volumes (120 tapes) has been carried out via this tool. The benefits envisaged are summarized as follows - Automatic one-shot computation of IL ranges and memory footprint before the archival phase (the task is completed in time order of 10s of hours ), zero trial and error in splitting volume into IL ranges as per tape capacity ( in a few seconds ). Creation of a complete archival plan in one go with the details of export jobs and the number of tape cartridges needed for archival ( in a few seconds ). This tool eliminates the potential human error in populating textual headers per tape cartridge. (potentially saving 3-4 hours of rewriting per tape). Further, it also automates the handling of RTL tape drives needed to write multiple tapes (replaces 8 operations by just 1 operation, saving ~ 4 minutes per tape write). It improves visibility by providing real-time status of all tape drives and the progress of tape writing.

The production code related to this project can be accessed at https://git.ongc.co.in/125049/seisarc-prod. Interested readers may contact me for a detailed technical description of the solution.

Modified on by Jayanth Boddu 02F71794-8F71-4622-6525-7BA70043E8EB Boddu_Jayanth@ongc.co.in

|

This is a second post in a 2 post series of my experience building inventory websites over the last few years. The first post talks about building inventory websites using MEAN stack and superfluousness of using MEAN for smaller inventory footprint / user base. If you haven't read the first post, you could read that here

In this post, I'd like to talk about a simpler version of inventory website (with just enough functionality) that you could build right now. I'll add my code at the end too.

The framework in question is called 'streamlit'. Streamlit is a python based framework to showcase your data science work developed with data scientists in mind. It natively runs in a web browser backed by a python script. And it is interactive - you can create and respond to user actions. If you know what Jupyter notebooks are (if you dont, they are a browser based execution environment backed by the terminal , again great for data science experimentation ), streamlit has what Jupyter notebook has , plus controls like text input, buttons, sliders etc.

Before we talk about why streamlit is the right framework, let me cover the bare bones of an inventory website in terms of database, web server and web page.

The fundamental building block of any inventory application is a data base containing all the information. This is the heart of the entire system. Think of this as CENTRAL STATE. Every user action either reads or updates this one central state. The user actions one could perform are usually called CRUD ( Create - Read - Update - Delete ). All the inventory actions could be composed using CRUD operations.

The second building block of an inventory application is a web server. Think of this as the brain of the system. It accepts requests from the user and sends responses. It is also connected to the database and does CRUD operations on behalf of the user. A web site is the interface for the user to get current inventory, update inventory , create new inventory items etc. When you enter an address in the browser bar, that's the first request made to the web server. It responds with a website. Now once the browser has the website, you can interact with your inventory through it. When you click a button, push a slider, select an option etc, your website sends a request to the web server on your behalf. The server has some pre defined rules for each request. Some requests make the server request inventory from the database and send it to the webpage.

So in summary , you need a database, a web server and a webpage. In the MEAN world, you use a MongoDB database, Express.js server , Angular 2 framework for building a website and all of this built the nodejs environment. If the above jargon doesnot make sense to you, that's alright. Replace everything by javascript and more javascript to give it some shape.

Now let's talk about streamit. First things first, it's based on python. Oh wait, you did go through the initiation into python in the previous post.

Moving on. I've give you a very simple recipe of an inventory website. All in a less than 100 lines of code [obviously, you can't launch into space with this. Oh wait, can you ?  ] ]

Install python and streamlit on your PC ( or server ), you can find installation instructions here. Take all your inventory in excel sheets. You can even convert it into .csv files, but if you don't, that's fine too. Write a .py file with the interaction you need. Look at the code for more details. Open a terminal and run 'streamlit run <filename>.py' and there you have it.

An inventory website being server on you LAN network. Just give everyone your IP address and port and they could use it too. No more writing code for the web server, html, css, javascript, angular boiler plate etc. One file, some python code and your inventory excel sheet or csv. This setup solves a simple problem I call visibility. Every one know the current state of inventory. All you have do is keep the excel sheet up to date and your users would see the latest inventory on the website automatically.

There are some problems it does not solve. For example, there's no real authentication. So don't use for external facing websites, obviously. Apart from that, you could create an excel sheet of user name and passwords and link it to your streamlit app to authenticate people.

Without further ado, you can find sample code here.

Call or text me if you need any help.

|

Today I learnt very simple trick to use version control across two folders to keep them in sync.

This is particularly useful if you shuffle your coding work between two devices and have been constantly copying changes from one to another. For example, I do most of my GPU heavy computation on a workstation but design and run EDA / cleaning scripts on my Mac. Another situation would be development on the local machine and pushing GPU heavy jobs to a HPC cluster, where you would have to constantly delete & replicate everything in the cluster memory.

To version control in case of personal project, you could you use GITHUB and for a project hosted on the Business Network , you could use git.ongc.co.in , but both of these were not applicable to my case.

So let's say have a project folder A on workstation and you want to keep it in sync with a project folder B on your removable media. These are the steps you need to perform

1. Initialise git in both A and B. -- `git init`

2. Create a new folder C and initialise a barebones version there. -- `git init --bare`

3. Set C as a remote repository of A. -- `cd A` , `git remote add <a name, example : C > <full path to C>

4. Set C as a remote repository of B. -- `cd B` , `git remote add <a name, example : C > <full path to C>

Now if you want to replicate changes of A into B, first you would have to make commits in A and push to C and then pull commits from C into B.

1. `cd A`, `git add .` , `git commit -m '<commit message>'`, `git push <name of remote > <branch name, ex : master>

2. `cd B` , git pull <name of remote > <branch name>

Similarly you can replicate the change made in B to A by doing the opposite -- committing and pushing from B and pulling in A.

In totality, all we are doing here is , we are removing a code hosting server like GITHUB from the picture and using a local repo C to play its role.

You can do this with multiple repositories , multiple branches etc.

Hope this helps someone.

Ref : https://stackoverflow.com/questions/6976459/push-git-project-to-local-directory

Modified on by Jayanth Boddu 02F71794-8F71-4622-6525-7BA70043E8EB Boddu_Jayanth@ongc.co.in

|

]

]