The Copyright Office, Government of India, has registered a copyright (# SW-19009/2024) for SEISARC, the groundbreaking in-house software developed by our team at the Processing Division, GEOPIC.

SEISARC is one of the first copyrighted innovations from the Seismic Processing team at GEOPIC. This Python-based, multi-system automation tool is designed to simplify the archival and delivery of large-volume seismic processing projects. As exploration acreage expands and both data resolution and the diversity of processing deliverables increase, SEISARC ensures the integrity of seismic processing deliverables, optimizes resource utilization, and boosts productivity, giving our team more time to enhance imaging deliverables.

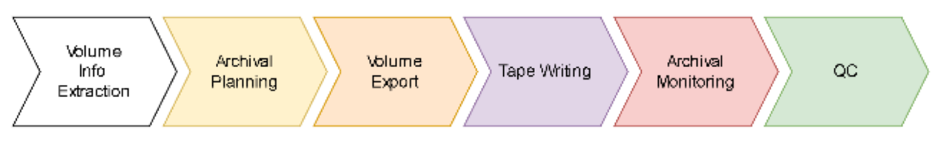

In large-volume projects, the data size exceeds the storage capacity of a single cartridge of the tape media technology currently available. Consequently, the archival process partitions the project volume into multiple SEGY files, each containing a subset of the overall project data, specifically the data for a range of inlines (IL). The IL range within each SEGY file is determined by the maximum capacity of the tape media. These SEGY files are first exported to disk storage and then written to tape media. To conserve shared disk space and compute resources, the volume export jobs are executed in phases. Each generated SEGY file is transferred from disk to tape using a tape drive controlled by a Remote Tape Library (RTL). After each tape write, a small subset of the written data is read back to disk and imported into the processing software for quality control (QC) of the archived data.
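The partitioning step can be sketched as follows. This is a minimal illustration, not SEISARC's actual code: the function name `partition_inlines` and its parameters are hypothetical, and it assumes that every inline occupies the same number of bytes, that inlines are numbered with an increment of 1, and that file-level header overhead is negligible.

```python
from typing import List, Tuple


def partition_inlines(first_il: int, last_il: int,
                      bytes_per_inline: int,
                      tape_capacity_bytes: int) -> List[Tuple[int, int]]:
    """Split [first_il, last_il] into contiguous IL ranges that each fit on one tape.

    Hypothetical helper: assumes a uniform size per inline and an inline
    increment of 1.
    """
    if bytes_per_inline <= 0:
        raise ValueError("bytes_per_inline must be positive")
    if bytes_per_inline > tape_capacity_bytes:
        raise ValueError("a single inline must fit on one tape")

    inlines_per_tape = tape_capacity_bytes // bytes_per_inline
    ranges = []
    start = first_il
    while start <= last_il:
        # The last range may be shorter than the others.
        end = min(start + inlines_per_tape - 1, last_il)
        ranges.append((start, end))
        start = end + 1
    return ranges


if __name__ == "__main__":
    # Toy numbers only: 10 inlines of 3 bytes each, tapes of 10 bytes.
    for il_from, il_to in partition_inlines(1000, 1009, 3, 10):
        print(f"SEGY file for IL {il_from}-{il_to}")
```

Because the ranges are contiguous and non-overlapping by construction, a plan built this way cannot contain a duplicate IL range.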

Currently, analysts manually manage data export/import jobs, RTL servers, and disk space. This manual intervention in repetitive tasks not only consumes valuable analysis time but also introduces the potential for human error. To understand the scale of the problem, consider a typical marine seismic project covering 6000 sq. km with a fold of 50. Archiving this project requires approximately 350 TB of tape, involving around 84 export jobs and equivalent disk space. If the archival process were run continuously with four tape drives operating in parallel, it would take approximately 10 days, assuming each export job takes 5-6 hours and tape writing takes 3-4 hours per tape. Additionally, because different software and hardware systems are used for processing and archival, volume exports must be managed continually within several constraints. These include the upper limit on the number of data exports before the permitted disk space for the project is exhausted, and the need to keep a minimum number of SEGY files ready as an input buffer during tape writing to maximize utilization of the available RTL infrastructure. Tape writing also involves multiple manual and sequential operations per tape, along with manual data dumps for archival QC and manual deletion of SEGY files to conserve disk space.
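A rough back-of-the-envelope calculation helps show where the time goes. The sketch below uses the figures quoted above (84 jobs, 350 TB, four tape drives) and the midpoints of the quoted duration ranges. The number of export jobs that run concurrently is not stated in the text, so `CONCURRENT_EXPORTS` is purely an assumed, adjustable parameter, and the helper `stage_hours` is a hypothetical name.

```python
import math


def stage_hours(n_jobs: int, n_parallel: int, hours_per_job: float) -> float:
    """Wall-clock hours for n_jobs equal-length jobs run n_parallel at a time."""
    return math.ceil(n_jobs / n_parallel) * hours_per_job


N_FILES = 84             # export jobs, one SEGY file (and tape) each
TOTAL_TB = 350           # total archive size
TAPE_DRIVES = 4          # drives writing in parallel
WRITE_HOURS = 3.5        # midpoint of the quoted 3-4 hours per tape
EXPORT_HOURS = 5.5       # midpoint of the quoted 5-6 hours per export
CONCURRENT_EXPORTS = 2   # ASSUMPTION: not given in the text

tb_per_file = TOTAL_TB / N_FILES
write_days = stage_hours(N_FILES, TAPE_DRIVES, WRITE_HOURS) / 24
export_days = stage_hours(N_FILES, CONCURRENT_EXPORTS, EXPORT_HOURS) / 24

print(f"Average SEGY size : {tb_per_file:.2f} TB")
print(f"Tape-writing stage: {write_days:.1f} days")
print(f"Export stage      : {export_days:.1f} days")
```

When exports and tape writes overlap, the slower of the two stages sets a floor on the total duration. The real figure also depends on QC read-backs, idle gaps between manual steps, and how many exports the shared disk and compute resources allow at once.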

An ideal archival process is fully automated and integrates the different software and hardware components of archival. Such a pipeline handles the entire process, from partitioning the project volume into multiple IL ranges to exporting the corresponding SEGY file for each IL range. It buffers export jobs and tape writes, taking into account the limited disk space and tape writing hardware. It automates the hardware control operations of assigning, loading, writing and unloading multiple tape media. Furthermore, it offers visibility of the entire archival pipeline, including real-time updates on the current status and the estimated time of completion. However, implementations of this ideal process are not uniformly found across the processing software currently in use. This leads to sub-optimal utilization of resources, increased manual work, and an elevated risk of human error. Additionally, in most cases, the archival infrastructure is designed to meet average rather than peak demand, and it is shared between multiple projects with varying timelines. Hence, there is a pressing need to accelerate the archival process and optimize resource utilization.
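The buffering logic described above can be illustrated with a simple refill rule. This is a hypothetical policy written for illustration, not the scheduler used in SEISARC: the function `exports_to_launch` and all of its parameters are assumed names, and disk space is counted in whole SEGY files of roughly equal size.

```python
def exports_to_launch(pending: int, running: int, ready: int, writing: int,
                      disk_slots: int, buffer_target: int) -> int:
    """Return how many new export jobs to start right now.

    pending       -- IL ranges not yet exported
    running       -- export jobs in progress (each will occupy one disk slot)
    ready         -- SEGY files on disk waiting for a free tape drive
    writing       -- SEGY files currently being written to tape
    disk_slots    -- maximum number of SEGY files the project quota can hold
    buffer_target -- number of files to keep exported or in export,
                     so that tape drives do not sit idle
    """
    # Never exceed the permitted disk space.
    headroom = disk_slots - (running + ready + writing)
    # Only export as much as is needed to refill the input buffer.
    shortfall = buffer_target - (running + ready)
    return max(0, min(pending, headroom, shortfall))


if __name__ == "__main__":
    # Four drives busy, one file ready, one export running, quota of 8 files.
    n = exports_to_launch(pending=10, running=1, ready=1, writing=4,
                          disk_slots=8, buffer_target=4)
    print(f"Start {n} new export job(s)")
```

Calling such a function each time an export finishes, a tape write completes, or a SEGY file is deleted keeps the drives supplied while respecting the disk quota.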

Moreover, each phase of archival has a few technological gaps. For example, existing processing software lacks essential functionality such as estimating the memory footprint of each inline when exported as SEGY, and close integration with the tape writing server.
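As an illustration of the first gap, the size of a SEGY export can be estimated from the standard SEG-Y layout: a 3200-byte textual header, a 400-byte binary header, and a 240-byte header in front of every trace. The sketch below is not SEISARC's method. The function names are hypothetical, and it assumes fixed-length traces, 4-byte samples by default, no extended textual headers, and the same number of traces in every inline.

```python
TEXT_HEADER_BYTES = 3200    # SEG-Y textual file header
BINARY_HEADER_BYTES = 400   # SEG-Y binary file header
TRACE_HEADER_BYTES = 240    # standard SEG-Y trace header


def segy_trace_bytes(samples_per_trace: int, bytes_per_sample: int = 4) -> int:
    """Bytes occupied by one trace: header plus samples."""
    return TRACE_HEADER_BYTES + samples_per_trace * bytes_per_sample


def segy_inline_bytes(traces_per_inline: int, samples_per_trace: int,
                      bytes_per_sample: int = 4) -> int:
    """Bytes occupied by all traces of one inline (assumed uniform)."""
    return traces_per_inline * segy_trace_bytes(samples_per_trace, bytes_per_sample)


def segy_file_bytes(n_inlines: int, traces_per_inline: int,
                    samples_per_trace: int, bytes_per_sample: int = 4) -> int:
    """Total size of a SEGY file holding n_inlines inlines."""
    file_headers = TEXT_HEADER_BYTES + BINARY_HEADER_BYTES
    return file_headers + n_inlines * segy_inline_bytes(
        traces_per_inline, samples_per_trace, bytes_per_sample)


if __name__ == "__main__":
    # Toy geometry for illustration only.
    size = segy_file_bytes(n_inlines=2, traces_per_inline=10, samples_per_trace=1000)
    print(f"Estimated SEGY size: {size} bytes")
```

For pre-stack data the number of traces per inline also depends on the fold, so real volumes with irregular coverage would need a per-inline trace count rather than a single constant.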

Hence, the team framed the manual intervention required in each phase of archival as an automation problem needing the integration of multiple systems – the processing software, the filesystem and the Remote Tape Library. To address this challenge, and to minimize the time and manual effort involved in seismic archival, they built a Python-based command line tool. This tool simplifies and accelerates each phase of the archival process, offering a streamlined solution that enhances efficiency and reduces manual intervention.

Introducing automation to each phase of the archival process increases productivity, optimizes resource utilization and reduces the scope for human error. This shift allows for increased focus on improving processing output while reducing the time allocated to archival tasks. Additionally, it enables the efficient utilization of disk space, tape drives, and the processor's time. Automation helps mitigate errors that cause losses of time and quality, such as export job or tape write failures, occurrences of "right data, wrong headers," and duplicate writing of an IL range.

This work has led to a multifold increase in speed and a decrease in the project time spent on archival. So far, three internal project volumes (120 tapes) have been archived with this tool. The envisaged benefits are summarized below:

- Automatic one-shot computation of IL ranges and memory footprint before the archival phase (the task is completed in the order of tens of hours).
- Zero trial and error in splitting the volume into IL ranges as per tape capacity (done in a few seconds).
- Creation of a complete archival plan in one go, with the details of export jobs and the number of tape cartridges needed for archival (done in a few seconds).
- Elimination of potential human error in populating textual headers per tape cartridge (potentially saving 3-4 hours of rewriting per tape).
- Automated handling of the RTL tape drives needed to write multiple tapes (8 operations replaced by 1, saving about 4 minutes per tape write).
- Improved visibility through real-time status of all tape drives and the progress of tape writing.
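To illustrate how populating textual headers can be made less error-prone, the sketch below builds a SEG-Y textual header programmatically. It relies only on the standard layout of that header (40 lines of 80 characters, 3200 bytes in total, each line conventionally starting with `C` and its line number). The function name `build_textual_header` and the example header text are hypothetical and do not reflect SEISARC's actual header template.

```python
from typing import Sequence

CARD_COUNT = 40   # lines in a SEG-Y textual header
CARD_WIDTH = 80   # characters per line


def build_textual_header(lines: Sequence[str], encoding: str = "ascii") -> bytes:
    """Return a 3200-byte textual header built from up to 40 lines of text.

    Use encoding="cp037" for an EBCDIC-encoded header.
    """
    if len(lines) > CARD_COUNT:
        raise ValueError(f"at most {CARD_COUNT} lines are allowed")

    cards = []
    for i in range(CARD_COUNT):
        text = lines[i] if i < len(lines) else ""
        card = f"C{i + 1:>2} {text}"          # e.g. "C 1 ..." through "C40 ..."
        if len(card) > CARD_WIDTH:
            raise ValueError(f"line {i + 1} exceeds {CARD_WIDTH} characters")
        cards.append(card.ljust(CARD_WIDTH))  # pad with spaces to fixed width

    header = "".join(cards).encode(encoding)
    if len(header) != CARD_COUNT * CARD_WIDTH:
        raise ValueError("encoded header is not 3200 bytes")
    return header


if __name__ == "__main__":
    # Hypothetical per-tape details filled in from the archival plan.
    header = build_textual_header(["TAPE 1 OF 84", "IL RANGE 1000-1071"])
    print(len(header), "bytes")
```

Generating the header from the same plan that defines the IL ranges means the text on each tape is derived from the data actually written to it, rather than typed in separately.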

The production code related to this project can be accessed at https://git.ongc.co.in/125049/seisarc-prod. Interested readers may contact me for a detailed technical description of the solution.