Interesting article on cloud-based automation

The article describes a prototype for a hosted, cloud-based automation, monitoring and historian application. It gives CNG fueling and marketing companies the ability to monitor their assets, analyze production, and help their customers locate nearby CNG stations from their current positions and get detailed information about them. Since the application is a web-based service, the only things users need to start monitoring their field devices are an internet connection, a browser and a password. The prototype also includes a mobile application that will run on any smartphone or tablet.

Modified on by B SUBAIR D6159E5D-6D12-04AF-6525-6FE2003CEE31 subair_b@ongc.co.in

|

Automation Networks: From Pyramid to Pillar

One way of doing this is to use TSN edge switches to connect end devices to the TSN network. “Of course, determinism will depend on the capabilities of the end device,” said Kleineberg, “but having non-deterministic devices on the network won’t impede the deterministic ones.”

Another approach is to swap out existing protocol masters/controllers with TSN-capable ones. “This approach allows you to keep your end devices as they are,” Kleineberg said. “Using a TSN-capable protocol master/controller, you can port your automation protocols to be transported over TSN. The master synchronizes the protocol schedule with the TSN schedule and allows the automation protocol to be transported seamlessly over TSN. This allows a step-by-step approach to TSN for brownfield plants because they can keep all their existing, protocol-specific devices and just change out the masters. Once that’s done, the devices can be changed out one by one over time. In the meantime, by transporting the automation protocol through TSN, connectivity between old and new devices can be maintained.”

Lund pointed out that, even without having all TSN-capable devices on your network, the plant can still get distinct benefits from having a TSN network in place. Kleineberg explained the reason for this: “Even if the end devices are non-deterministic or not TSN capable, their communications will travel over the TSN network. As the communications from those devices merge onto the TSN network, they will be handled deterministically,” he said.

As exciting as the potential is for TSN, Kleineberg and Lund agree that the technology is currently overhyped. Despite that, both say that real industry interest in it is growing exponentially.

“TSN is currently just a Layer 2 technology; it needs the development of application protocols to advance further,” said Kleineberg. He admits that some pushback against TSN does exist from suppliers that prefer to use proprietary networks. “But the farther those suppliers get away from standard Ethernet, the harder it will be for them,” he said. “End users are very positive about developments like TSN that promise to bring standard networks to the plant floor.”

https://www.automationworld.com/automation-networks-pyramid-pillar


|

A Japanese semiconductor plant shows how autonomous control based on artificial intelligence is becoming reality

As process control technologies advance, one concept gaining prominence is autonomy. When contrasted with conventional automation, one of the main differentiators of autonomy is applying artificial intelligence (AI) so an automation system can learn about the process and make its own operational improvements. Although many companies find this idea intriguing, there is understandable skepticism. The system’s capability is only as good as its foundational algorithms, and many potential users want to see AI in operation somewhere else before buying into the idea wholeheartedly. Those real-world examples are beginning to emerge.

Yokogawa’s autonomous control systems are built around factorial kernel dynamic policy programming (FKDPP), which is an AI reinforcement learning algorithm first developed as a joint project of Yokogawa and the Nara Institute of Science and Technology (NAIST) in 2018. Reinforcement learning techniques have been used successfully in computer games, but extending this methodology to process control has been challenging. It can take millions, or even billions, of trial-and-error cycles for a software program to fully learn a new task.
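To make the trial-and-error idea concrete, here is a minimal sketch of textbook tabular Q-learning on a toy temperature problem. It is not FKDPP, whose internals are not described here; the states, actions, reward weights and the names `step` and `learn_policy` are all assumptions chosen for illustration.

```python
# Toy problem (assumed): the state is the temperature offset from the setpoint,
# an integer from -3 to +3. Actions: -1 = cool, 0 = hold, +1 = heat.
STATES = range(-3, 4)
ACTIONS = (-1, 0, 1)
GAMMA = 0.9  # discount factor for future rewards


def step(state, action):
    """Apply an action and return (next_state, reward)."""
    next_state = max(-3, min(3, state + action))
    # Penalise distance from the setpoint plus a small energy cost for acting.
    reward = -abs(next_state) - 0.1 * abs(action)
    return next_state, reward


def learn_policy(sweeps=50):
    """Try every action in every state repeatedly and keep Q-value estimates."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(sweeps):
        for s in STATES:
            for a in ACTIONS:
                next_state, reward = step(s, a)
                best_next = max(q[(next_state, b)] for b in ACTIONS)
                # The toy environment is deterministic, so a learning rate
                # of 1 (full replacement of the old estimate) is sufficient.
                q[(s, a)] = reward + GAMMA * best_next
    # The learned policy picks the highest-valued action in each state.
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


policy = learn_policy()
```

In this toy case the learned policy heats when the room is below the setpoint, cools when it is above, and holds at the setpoint. A real plant has continuous, noisy and high-dimensional states, which is why the number of trials needed becomes the central difficulty.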

Since its introduction, FKDPP has been refined and improved for industrial automation systems, typically by working with plant simulation platforms used for operator training and other purposes. Yokogawa and two other companies created a simulation of a vinyl acetate manufacturing plant. The process called for modulating four valves based on input from nine sensors to maximize the volume of products produced, while conforming to quality and safety standards. FKDPP achieved optimized operation with only about 30 trial-and-error cycles—a significant achievement.

This project was presented at the IEEE International Conference in August 2018. By 2020, this technology was capable of controlling entire process manufacturing facilities, albeit on highly sophisticated simulators. So, the next question became, is FKDPP ready for the real world?

From simulation to reality

Figure 1. Yokogawa’s Komagane facility has complete semiconductor manufacturing capabilities, which must operate in clean room environments.

Yokogawa answered that question at its Komagane semiconductor plant in Miyada-mura, Japan (figure 1). Here, much of the production takes place in clean room environments under the tightly controlled temperature and humidity conditions necessary to produce defect-free products. The task of the AI system is to operate the heating, ventilation, and air conditioning (HVAC) systems optimally by maintaining required environmental conditions while minimizing energy use.

It is understandable that an actual application selected for this type of experiment would be of modest scale with minimal potential for safety risks. This conservative approach may be less dramatic than one at an oil refinery, but this does not reduce its validity as a proof of concept.

Figure 2. Semiconductor sensors manufactured in the Komagane plant go into differential pressure transmitters and must have exceptional accuracy and stability over time.

At first glance, operating an HVAC system autonomously might not seem complex. But the HVAC systems supporting the tightly controlled clean room environment account for 30 percent of the total energy consumed by the facility, and so represent a sizeable cost. Japan’s climate varies through the seasons, so there are adjustments necessary at different times of the year to balance heating and cooling, while providing humidity control.

The facility resides in a mountain valley at an elevation of 646 meters (2,119 feet). It has a temperate climate and tends to be relatively cool, with an annual temperature between –9° and 25°C (15.8° and 77°F). The plant produces semiconductor-based pressure sensors (figure 2) that go into the company’s DPharp pressure transmitter family, so maintaining uninterrupted production is essential. Even though this demonstration is at one of Yokogawa’s own plants, the cost and production risks are no less real than those of an external customer.

The facility’s location is outside the local natural gas distribution system, so liquefied petroleum (LP) gas must be brought in to provide steam for heating and humidification. Air cooling runs on conventional grid-supplied electric power. Both systems work in concert as necessary to maintain critical humidity levels.

Complex energy distribution

Considerations surrounding energy use at Japanese manufacturing plants begin with the high domestic cost. Energy in all forms is expensive by global standards, and efficiency is paramount. The Komagane facility uses electric furnaces for silicon wafer processing, and it is necessary to recover as much waste heat as possible from these operations, particularly during winter months.

To be considered a success, the autonomous control system must balance numerous critical objectives, some of which are mutually exclusive. These objectives include:

- Strict temperature and humidity standards in the clean room environment must be maintained for the sake of product quality but with the lowest possible consumption of LP gas and electricity.

- Weather conditions can change significantly over a short span of time, requiring compensation.

- The clean room environment is very large, so there is a high degree of thermal inertia. Consequently, it can take a long time to change the temperature.

- Equipment in the clean room also contributes heat, but this cannot be regulated by the automated control system.

- Waste heat from electric furnaces is used as a heat source instead of LP gas, but the amount available is highly variable, driven by the number of production lines in use at any given time.

- Warmed boiler coolant is the primary heat source for external air. If more heat is necessary than is available from this recovered source, it must come from the boiler burning LP gas.

- Outside air gets heated or cooled based on the local temperature, typically between 3° and 28°C (37.4° and 82.4°F). For the greater part of the year, outside air requires heating.
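The heat-source priority in the list above (recovered heat first, LP gas only for the shortfall) can be sketched as a small function. This is an illustrative assumption, not the plant's control logic: the function name, the use of kilowatts and the example numbers are hypothetical.

```python
def split_heat_demand(demand_kw, recovered_kw):
    """Split a heating demand between recovered waste heat and the LP gas boiler.

    Returns (heat_from_recovered_kw, heat_from_lp_gas_kw).
    Units and names are assumptions for illustration only.
    """
    if demand_kw < 0 or recovered_kw < 0:
        raise ValueError("demand and recovered heat must be non-negative")
    # Use as much recovered heat as is available, up to the demand.
    from_recovered = min(demand_kw, recovered_kw)
    # Any shortfall has to come from burning LP gas.
    from_lp_gas = demand_kw - from_recovered
    return from_recovered, from_lp_gas


# Few production lines running: recovered heat covers only part of the demand.
print(split_heat_demand(100.0, 60.0))
# Many lines running: recovered heat covers everything, so no LP gas is needed.
print(split_heat_demand(50.0, 80.0))
```

Because the recovered heat varies with the number of production lines in use, the LP gas share changes continuously, which is part of what makes the optimization problem hard.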

The existing control strategy (figure 3) is more complex than it first appears. Below the surface, the mechanisms involved are interconnected in ways that have changed over the years, as plant engineers have worked to increase efficiency.

Figure 3. The HVAC system brings air in from outside nearly continuously to ensure adequate ventilation. The air must be conditioned to maintain tight temperature and humidity requirements in these critical manufacturing environments.

There have been numerous previous attempts to reduce LP gas consumption without making major new capital equipment investments. These incremental improvements reached their practical limits in 2019, which drove implementation of the new FKDPP-based control strategy in early 2020.

The implementation team selected a slow day during a scheduled production outage to commission the new control system. During that day, the AI system was allowed to do its own experimentation with the equipment to learn its characteristics. After about 20 iterations, the AI system had developed a process model capable of running the full HVAC system well enough to support actual production.

Over the weeks and months of 2020, the AI system continued to refine its model, making routine adjustments to accommodate changes of production volumes and seasonal temperature swings. The ultimate benefit of the new FKDPP-based system was a reduction of LP gas consumption of 3.6 percent after implementation in 2020, based entirely on the new AI strategy, with no major capital investment required.

FKDPP-based AI is one of the primary technologies supporting Yokogawa’s industrial automation to industrial autonomy (IA2IA) transition, complementing conventional proportional-integral-derivative and advanced process control concepts in many situations, and even replacing complex manual operations in other cases. Real-time control using reinforcement learning AI, as demonstrated here, is the next generation of control technology, and it can be used with virtually any manufacturing process to move it closer to fully autonomous operation.

Source: https://www.isa.org/intech-home/2022/october-2022/features/case-study-ai-based-autonomous-control


|

A new digital twin proxy is set to transform operational efficiency

BY HASSANE KASSOUF, EMERSON AUTOMATION SOLUTIONS

Despite the recent oil price optimism, returning to pre-2013 operational tactics seems unlikely. The demands for digitalization and automation from oil and gas operators are rising at unprecedented speed. By 2020 well over 20 billion Internet of Things (IoT) devices will be connected, with more than 5 billion devices starting to use edge intelligence and computing to usher in the next phase of digital transformation.

Furthermore, volatile oil prices and super-fast drilling operations have become today’s new global normal. It is increasingly difficult to build E&P plans that can be operationally credible and still make financial sense a year or two into execution. A new emerging concept, a proxy digital twin, is starting to bridge this gap by virtually connecting services between all surface and subsurface components of the exploration-to-market value chain. Cloud-based connectivity between device sensors, combined with reservoir artificial intelligence (AI) capabilities that scan massive amounts of geological and historical production data using parallel computing, enables full-scale operational control and collaboration between the subsurface reservoir and across surface operations.

The digital twin impact is beginning to spread from manufacturing, where it initially helped prevent unplanned shutdowns by pinpointing root-cause problems in machinery, into what is called “connected services.” These, for example, use subsurface E&P software to provide data about failures in wells or optimization of production operations and flow farther down the chain.

So what is driving this evolution? Managers are making very high-risk decisions about future investment and development strategies at staggering costs. Conventional production operations are dated, expensive, time-consuming and uncollaborative, leveraging only a negligible fraction of data relative to the volumes collected. To provide some context, Emerson Automation Solutions’ research, in step with industry data, shows that more than 65% of projects greater than $1 billion fail, with companies exceeding their budgets by more than 25% or missing deadlines by more than 50%.

To reverse current industry trends of capital project cost and schedule overages, subsurface knowledge must be made available at the speed of surface operations. Today, digitalization is making that possible. Software will connect surface and subsurface technologies with automation and control systems, modeling and simulation systems, and human operations. The Industrial Internet of Things and AI are driving the speed and scale at which Big Data can be translated into actionable findings and reproduced across asset value chains. What is the outcome? Accelerated operations, increased recovery factor and minimized capex are all at reduced risk. Predictive analytics directly translates to operational and project efficiency.

How is it that industrial automation players can lead this transformation? They have a front-row presence in digital transformation initiatives of thousands of clients and their organizations. What Emerson has observed is that if a cross-organizational digital adoption culture is established in advance, then product and IT automation stands a chance. Once actionable data starts flowing through a connected Big Loop via an AI-enabled software infrastructure, more holistic questions about how to improve the bottom-line business value will emerge. Patterns will start to surface from the activity. Oil and gas users will be encouraged to ask questions like: where exactly will maintenance be planned in the hydrocarbon flow process from the reservoir to the well to the pipeline to improve HSE practices, or does it make sense to build a predictive model for data flowing from sensors to allocate the right equipment? That is when and where breakthroughs will happen. Insightful questions can be asked, not just about today’s problems, but about future problems as well.

By leveraging predictive analytics, machine learning and IoT-connected sensors, it is possible to improve E&P operations by intelligently using greater masses of data, including seismic, production and historical drilling, to help validate and perpetually refine predictive reservoir models. Reservoir-driven digital collaboration also optimizes production and flow from upstream into midstream and downstream operations, eliminating the remaining area of pervasive inefficiencies.


|

Digital Transformation in Oil & Gas

As an Electronics and Instrumentation Engineer, I have a keen interest in the latest digital technologies that can make our lives easier. This short article, "Digital Transformation in Oil & Gas", is a humble attempt to summarise the information I have gathered. It aims to give the reader an overview of the digital technologies used in oil and gas, which may help us take up digital initiatives in our own organisation.

For my part, I have developed a small application for managing instrument and spare lists effectively, built with Visual Basic in Excel, which I have named the "Instrumentation Management System (IMS)". The following working-steps file gives an idea of it: IMS Steps.mht. If anyone is interested, I will also share the IMS prototype. For now it can be used to maintain the instrument and spare list, print the details of a particular instrument or spare with a single click, and list the instruments and spares that are not available (quantity = 0), so that missing spare parts are identified early and procured on time. The maintenance management part is yet to be developed, because it is difficult to build a robust maintenance management system in Visual Basic that covers preventive maintenance details, work orders and reports such as calibration reports and job completion reports, but I hope to develop it in future. Now, coming to digital transformation in oil and gas:

· Preface:

Recently, ONGC published its "Energy Strategy 2040" report. According to the report, ONGC has a larger vision of investing in "Digital Transformation" by establishing a "Digital Centre of Excellence (DCoE)" under the "Renewables, Digital and Technology (RDT)" team headed by the Director (RDT).

The DCoE will be responsible for:

- Defining and driving the digital agenda

- Supervising execution and monitoring performance of digital initiatives

- Building long-term capability within ONGC

- Building and managing ONGC's strategic partnerships in digital

Once the DCoE is implemented according to the 2040 road map, ONGC can join the pool of exploration and production companies that are implementing the twelve underlying technology drivers reshaping the E&P industry. These drivers help companies achieve three key strategic objectives: increased production, reduced costs, and enhanced efficiency.

- Big-data and analytics

- Cloud computing and storage

- Collaborative technology platforms

- Real-time communication and tracking

- Mobile connectivity and augmented reality

- Sensors

- 3D scanning

- Virtual reality

- Additive manufacturing

- Unmanned aerial vehicles

- Robotics and automation

- Cyber-security and block chain

Digital Oil Field & The Great Crew Change

Oil and gas (O&G) companies are currently experiencing major disruption. It’s becoming increasingly difficult to find new sources of oil. As a result, exploration and development investments are progressively made in remote and environmentally sensitive areas, adding significant cost and complexity to capital projects. More stringent regulations are increasing constraints on the business. At the same time, a generation of experienced employees is retiring from the industry workforce. Exacerbating the current environment is the precipitous drop in oil price, putting the entire industry under pressure.

Persistent volatility and production oversupply in global energy markets over the past three years, and the resulting significant and sustained depression of oil prices, have profoundly impacted the entire O&G ecosystem. To thrive in both the current market conditions and the projected future low carbon economy, it will not be enough for most O&G companies to simply enhance the way they currently operate.

Today’s market and industry outlook are forcing O&G companies to re-examine core business capabilities, and explore new ways to execute business strategies in a dynamic and volatile marketplace. Whether large or small, national or international, digitalization of key operational workflows in O&G companies will be critical for success in the years to come.

It is estimated that most of the cumulative experience and knowledge will be lost to the oil and gas industry in the next decade as large numbers of petroleum engineers retire.

Therefore, integrating high-tech systems and digital technologies in the oil and gas industry will improve efficiency and production.

1. Introduction: Key features needed for Operational Excellence in the Oil and Gas Industry

2. Digital Transformation

The end goal of a company is to drive digital transformation across the entire operational life cycle to achieve the following:

- Improved labor utilization

- Operational Efficiency

- Simplicity - Speed of execution and processing

- Early Failure warning savings, particularly related to shutdowns

- Increased asset availability in multi-use & multi-purpose facilities

- Reduced maintenance costs

- Better adherence to compliance requirements

- Reduced unplanned downtime

- Improved asset utilization

- Smart Oil Field

- Real-Time Data

- Integrated Production Models

- Integrated Visualization

- Intelligent Alarming & Diagnostics

- Enhanced Business Processes & Procedures

3. IoT & IIoT

- IoT - Internet of Things

- Internetworking of physical devices, vehicles, buildings and other things

- IoT is about consumer-level devices, where the impact of a failure is low.

- IIoT - Industrial Internet of Things

- IIoT uses more sophisticated devices to extend existing manufacturing and supply chain monitoring to provide broader, more detailed visibility and enable automated controls and accomplished analytics.

- IIoT connects critical machines and sensors in high-stakes industries such as Aerospace, Defence, Healthcare and Energy.

- Industry 4.0 is the trend towards automation and data exchange in manufacturing technologies and processes, which include cyber-physical systems (CPS), the internet of things (IoT), industrial internet of things (IIoT), cloud computing, cognitive computing and artificial intelligence.

- IIoT Technologies

- RFID Technology

- Logistics and Supply

- Manufacturing

- Transportation

- Well Performance - Emerson Process Management

- Augmented Reality

- Edge Technology

- Machine Learning - Predictive Analytics

- Effective Asset Monitoring

- Computerised Maintenance Management System

- Enterprise Asset Management

- Applications of IIoT Technologies implemented

- On a larger scale, businesses that implement IoT can also unlock these benefits:

- Asset Tracking. The ability to know where each item is at any moment in time.

- Real-time Inventory Management. The ability to control the asset flow in real time and use real-time information for strategic purposes.

- Risk prevention/Data privacy. IoT can help ensure better risk management and data security.

- Digital Upstream – Following fields can be digitalised

- Essential Characteristics of Smart Oil Field

- IIoT

- Big Data & Analytics

- Artificial Intelligence

- Mobile and Cloud

- Network and Security

- Applications of Smart Oil Field

- Using real-time information for delivering safe, reliable and efficient work, well construction company BP is investing in Big Data & AI

- JATONTEC has successfully conducted an initial oil field trial in two major oil fields in China. Deployments are expected to ramp up quickly in the near future.

- Technologies Implemented

- DIGITAL TWIN - a digital replica of a living or non-living physical entity. By bridging the physical and the virtual world, data is transmitted seamlessly, allowing the virtual entity to exist simultaneously with the physical entity.

- Organisations implementing Digital Twin Applications/solutions

4. Predictive Analytics and Maintenance

Predictive analytics has the potential to revolutionize the operations of an oil and gas enterprise - in upstream, midstream, and downstream. Areas that can benefit from rapid action on real-time insights include:

- Maintenance and optimization of assets,

- Product optimization,

- Risk management and HS&E,

- Storage and logistics,

- Market analysis and effectiveness.

Schneider Electric, for example, simply reads the motor current and uses IoT to predict the failure of motors and similar equipment.
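As a rough illustration of predicting trouble from motor current readings, the sketch below flags samples that deviate strongly from a rolling baseline. It is a generic z-score check, not Schneider Electric's method; the function name, window size, threshold and sample values are all assumptions.

```python
from statistics import mean, stdev


def flag_current_anomalies(samples, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the recent baseline.

    Each reading is compared with the mean and standard deviation of the
    previous `window` readings (a simple rolling z-score).
    """
    flagged = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        # Floor the spread so a perfectly flat baseline does not divide by zero.
        sigma = max(stdev(baseline), 1e-9)
        if abs(samples[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical motor current readings in amperes; index 6 is a sudden spike.
readings = [10.0, 10.1, 9.9, 10.0, 10.1, 10.0, 14.5, 10.0]
print(flag_current_anomalies(readings))
```

A production system would typically add filtering, trend analysis and equipment-specific models, but the principle of comparing live readings with an expected baseline is the same.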

A computerized maintenance management system, or CMMS, is software that centralizes maintenance information and facilitates the processes of maintenance operations. It helps optimize the utilization and availability of physical equipment such as vehicles, machinery, communications, plant infrastructure and other assets. Also referred to as CMMIS, or Computerized Maintenance Management Information System, CMMS systems are found in manufacturing, oil and gas production, power generation, construction, transportation and other industries where physical infrastructure is critical. A CMMS focuses on maintenance, while an Enterprise Asset Management (EAM) system takes a comprehensive approach, incorporating multiple business functions. A CMMS starts tracking after an asset has been purchased and installed, while an EAM system can track the whole asset lifecycle, starting with design and installation.

CMMS plays a critical role in delivering reliable uptime by providing:

- Asset visibility: Centralized information in the CMMS database enables maintenance managers and teams to almost instantly call up when an asset was purchased, when maintenance was performed, frequency of breakdowns, parts used, efficiency ratings and more.

- Workflow visibility: Dashboards and visualizations can be tuned to technician and other roles to assess status and progress virtually in real time. Maintenance teams can rapidly discover where an asset is, what it needs, who should work on it and when.

- Automation: Automating manual tasks such as ordering parts, replenishing MRO inventory, scheduling shifts, compiling information for audits and other administrative duties helps save time, reduce errors, improve productivity and focus teams on maintenance rather than administrative tasks.

- Streamlined processes: Work orders can be viewed and tracked by all parties involved. Details can be shared across mobile devices to coordinate work in the field with operational centers. Material and resource distribution and utilization can be prioritized and optimized.

- Managing field workforces: Managing internal and external field workforces can be complex and costly. CMMS and EAM capabilities can unify and cost-effectively deploy internal teams and external partnerships. The latest EAM solutions offer advances in connectivity, mobility, augmented reality and blockchain to transform operations in the field.

- Preventive maintenance: CMMS data enables maintenance operations to move from a reactive to a proactive approach. Data derived from daily activities as well as sensors, meters and other IoT instrumentation can deliver insights into processes and assets, inform preventive measures and trigger alerts before assets fail or underperform.

- Consistency and knowledge transfer: Documentation, repair manuals and media capturing maintenance procedures can be stored in CMMS and associated with corresponding assets. Capturing and maintaining this knowledge creates consistent procedures and workmanship. It also preserves that knowledge to be transferred to new technicians, rather than walking out the door with departing personnel.

- Compliance management: Compliance audits can be disruptive to maintenance operations and asset-intensive businesses as a whole. CMMS data makes an audit exponentially easier by generating responses and reports tailored to an audit’s demands.

- Health, safety and environment: In line with compliance management, CMMS and EAM offer central reporting for safety, health and environmental concerns. The objectives are to reduce risk and maintain a safe operating environment. CMMS and EAM can provide investigations to analyze recurring incidents or defects, incident and corrective action traceability, and process change management.

- Resource and labor management: Track available employees and equipment certifications. Assign specific tasks and assemble crews. Organize shifts and manage pay rates.

- Asset registry: Store, access and share asset information such as:

  - Manufacturer, model, serial number and equipment class and type

  - Associated costs and codes

  - Location and position

  - Performance and downtime statistics

  - Associated documentation, video and images such as repair manuals, safety procedures and warranties

  - Availability of meters, sensors and Internet of Things (IoT) instrumentation

- Work order management: Typically viewed as the main function of CMMS, work order management includes information such as:

  - Work order number

  - Description and priority

  - Order type (repair, replace, scheduled)

  - Cause and remedy codes

  - Personnel assigned and materials used

- Work order management also includes capabilities to:

  - Automate work order generation

  - Reserve materials and equipment

  - Schedule and assign employees, crews and shifts

  - Review status and track downtime

  - Record planned and actual costs

  - Attach associated documentation, repair and safety media
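The work order fields listed above map naturally onto a small record type. The sketch below is only an illustration of that data structure, not the schema of any particular CMMS product; the class names, field names and example values are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class OrderType(Enum):
    """Order types named in the list above."""
    REPAIR = "repair"
    REPLACE = "replace"
    SCHEDULED = "scheduled"


@dataclass
class WorkOrder:
    """Hypothetical work order record holding the fields a CMMS typically tracks."""
    number: str
    description: str
    priority: int
    order_type: OrderType
    cause_code: str = ""
    remedy_code: str = ""
    personnel: List[str] = field(default_factory=list)
    materials: List[str] = field(default_factory=list)
    planned_cost: float = 0.0
    actual_cost: float = 0.0

    def cost_variance(self) -> float:
        """Actual minus planned cost; a positive value means an overrun."""
        return self.actual_cost - self.planned_cost


# Example with made-up values.
order = WorkOrder(
    number="WO-001",
    description="Replace pressure transmitter seal",
    priority=1,
    order_type=OrderType.REPLACE,
    personnel=["technician A"],
    materials=["seal kit"],
    planned_cost=100.0,
    actual_cost=130.0,
)
print(order.cost_variance())
```

Keeping planned and actual costs on the same record is what makes the "record planned and actual costs" capability straightforward to report on.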

- Some of the best CMMS software

5. Big Data Analytics and Artificial Intelligence

AI has already been incorporated into a number of sectors within the oil and gas industry as part of global efforts to digitally transform exploration and production operations. But what is the future of AI technology in the oil and gas industry?

The industry seems to have readily accepted digital technologies such as AI, and is optimistic about the potential of this technology.

Aker BP Improvement senior vice president Per Harald Kongelf said: “The oil and gas industry is facing a rapidly changing digital landscape that requires cutting-edge technologies to cultivate growth and success.”

IBM senior manager Brian Gaucher said: “Cognitive environments and technologies can bring decision makers together, help them seamlessly share insights, bring in heterogeneous data sets more fluidly, and enable target analysis and simulation.”

- AI Applications

- HR

- Finance

- Maintenance and Capital Asset management

- Predictive analysis to get insights for better performance

- Preventative Maintenance Programs

- Capital Planning

- Sustainable environment development

- Case: Biodentify - DNA analysis to find oil

- AI - Drilling Performance Optimization

- Hole condition: avoidance of stuck pipe

- Mud Flow IN/OUT Prediction for NPT

- Drill bit selection

- Drilling process analysis and breakdown operations

- Digitalization & AI in logging analysis

- AI - Production Optimizations

- Gas Lift Optimizations

- Applied Digitalization & AI - Assets (Asset Integrity)

- Equipment problems follow a characteristic course that ML can spot

- Safety Valve Monitoring

- Seismic Sections Images to SegY

- Salt Prediction and Seismic Inversion

- Ground water monitoring

- Fuel Price Prediction using AI

-

Applications of Artificial Intelligence adopted by some organisations:

-

In January 2019, BP invested in Houston-based technology start-up Belmont Technology to bolster the company’s AI capabilities, developing a cloud-based geoscience platform nicknamed “Sandy.”

-

Sandy allows BP to interpret geology, geophysics, historic and reservoir project information, creating unique “knowledge-graphs.”

-

The AI intuitively links information together, identifying new connections and workflows, and uses these to create a robust image of BP’s subsurface assets. The oil company can then consult the data in the knowledge-graph, with the AI using neural networks to perform simulations and interpret results.

-

In March 2019, Aker Solutions partnered with tech company SparkCognition to enhance AI applications in its ‘Cognitive Operation’ initiative.

-

SparkCognition’s AI systems will be used in an analytics solution platform called SparkPredict, which monitors topside and subsea installations for more than 30 offshore structures.

-

The SparkPredict platform uses machine learning algorithms to analyse sensor data, which enables the company to identify suboptimal operations and impending failures before they occur.
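The idea of spotting an impending failure in sensor data can be illustrated with a very small sketch. This is not SparkPredict's method, which the article does not describe; it is a plain rolling z-score check standing in for a learned model, and the function name, window size, threshold and vibration readings are all assumptions made for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: treat any change at all as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration readings (mm/s) with a single spike at index 8.
vibration = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 2.0, 1.9, 6.5, 2.0]
print(flag_anomalies(vibration))  # [8]
```

A production system would typically learn normal behaviour from many correlated sensors rather than a single series, but the principle is the same: compare new readings against an expectation built from history and raise a flag before the deviation becomes a failure.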

-

Shell adopted similar AI software in September 2018, when it partnered with Microsoft to incorporate the Azure C3 Internet of Things software platform into its offshore operations.

-

Baker Hughes, a GE company, and C3.ai announced a joint venture to deliver AI solutions across the oil and gas industry.

-

Big Data Analytics:

-

Data Analytics and Visualization using Tableau

-

With Tableau, you can analyze the data for value that makes you more than a commodity, whether it’s increasing output, reducing downtime, or improving customer service. With an efficient platform for gaining insights across geographies, products, services, and sectors, you can maximize downstream profits and minimize upstream costs. You can also help clients, partners, investors, and the public understand and visualize your value with interactive online dashboards.

-

With Tableau, ExxonMobil has empowered customers to get the answers they need themselves, improved data quality for work in the Gulf of Mexico, and saved a remarkable amount of time, in some cases up to 95%. ExxonMobil has also been building a culture of safety using Tableau.

-

Getting ahead of the metrics with data visualizations at GE Oil & Gas

- Cleaned up dashboards and business quality metrics for reporting
- Increased data accuracy by 90% compared to previous years
- Grown reporting and data visualization usage across the business

-

Benefits of Adopting Big Data in The Oil Industry

-

According to Mark P. Mills, a senior fellow at the Manhattan Institute, “Bringing analytics to bear on the complexities of shale geology, geophysics, stimulation, and operations to optimize the production process would potentially double the number of effective stages, thereby doubling output per well and cutting the cost of oil in half.”
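The arithmetic behind "doubling output per well and cutting the cost of oil in half" can be made explicit. The sketch below assumes the cost of drilling and completing a well stays fixed while output doubles; the dollar and barrel figures are hypothetical and chosen only to make the numbers easy to follow.

```python
def cost_per_barrel(well_cost_usd, barrels_produced):
    """Unit cost when a fixed well cost is spread over total output."""
    return well_cost_usd / barrels_produced


well_cost = 8_000_000        # hypothetical cost of one well, USD
baseline_output = 200_000    # hypothetical barrels recovered per well

baseline = cost_per_barrel(well_cost, baseline_output)
doubled = cost_per_barrel(well_cost, 2 * baseline_output)

print(baseline)  # 40.0
print(doubled)   # 20.0
```

If analytics added cost to each well, the saving would be smaller than one half, which is why the quote is framed as a potential rather than a guarantee.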

-

A tech-driven oil field is already expected to tap into 125 billion barrels of oil and this trend may affect the 20,000 companies that are associated with the oil business. Hence, in order to gain competitive advantage, almost all of them will require data analytics to integrate technology throughout the oil and gas lifecycle.

6. Conclusion

-

Earl Crochet, Director of Engineering and Operational Optimization at Kinder Morgan and a speaker at the OPEX Online 2019 conference, said: “In-house expertise and third-party expertise, in an ideal situation, should work together. In-house expertise should primarily manage the day-to-day, and the basic design should be supported by ongoing continual support in-house. A lot of this becomes tribal knowledge. However, you may need to rely on third-party expertise to fill in gaps in your knowledge, to help during a transition, or to deal with topics that are too technical and specific to require a full-time in-house professional.”

-

Oil and gas companies need to become predictive, using technologies that help them anticipate what will occur, so that they can increase asset availability by:

- Monitoring, to know what is happening right now by gaining a near-real-time view of process and asset status.
- Becoming prescriptive, to understand what should happen. This allows companies to look clearly at the options and make decisions that will optimize operations.
- Becoming descriptive, to understand and be able to explain up through management what happened, and to share these insights with the organization to help make informed decisions.

-

Today, digital is a key enabler in oil and gas to reduce costs, make faster and better decisions, and to increase workforce productivity.

-

Last but not least, Yuval Noah Harari poses a question: “As computers become better and better in more and more fields, there is a distinct possibility that computers will out-perform us in most tasks and will make humans redundant. And then the big political and economic question of the 21st century will be, ‘What do we need humans for?’ or at least ‘What do we need so many humans for?’”

By Upendra Chokka

AEE (Instrumentation)

Rajasthan Kutch-Onland Exploratory Asset

ONGC Jodhpur

|

Digital Twin Spawns Automation Efficiencies

By Beth Stackpole, Automation World Contributing Writer, on June 6, 2017

At Rockwell Automation, the whole premise of the digital twin is to remove the need for the physical asset, whether it’s to test the actual hardware or control systems, notes Andy Stump, business manager for the company’s design software portfolio. Rockwell’s Studio 5000 Logix Emulate software enables users to validate, test and optimize application code independent of physical hardware while also allowing connectivity to third-party simulation and operator training systems to help teams simulate processes and train operators in a virtual environment.

In this context, a digital twin can be employed to provide a safer, more contextualized training environment that focuses on situational experience. “It helps with emergency situations, starting up and shutting down—things you don’t encounter every day,” Stump explains.

A digital twin of a control system created in the Logix Emulate tool could also be tapped for throughput analysis, Stump adds, ensuring, for example, that a packaging machine could handle a new form factor without having to actually bring down the machine to test the new design. “Any time you take a machine out of production, it’s expensive,” he says. “If you can estimate that a machine is going to be down 60 percent of the time running what-if scenarios in a digital twin, there’s a lot of money to be saved.”

Moving forward, Rockwell will leverage new technologies such as virtual reality (VR) and augmented reality (AR) to enhance its vision for a digital twin. At the Hannover Fair in April, the company demonstrated a next-generation, mixed-reality virtual design experience using its Studio 5000 development environment with the Microsoft HoloLens VR headset.

For Siemens, the concept of a digital twin straddles both product design and production. In a production capacity, the digital twin exists as a common database of everything in a physical plant—instrument data, logic diagrams, piping, among other sources—along with simulation capabilities that can support use cases like virtual commissioning and operator training. Comos, Siemens’ platform for mapping out a plant lifecycle on a single data platform, and Simit, simulation software used for system validation and operator training, now have tighter integration to support more efficient plant engineering and shorter commissioning phases, says Doug Ortiz, process automation simulation expert for Siemens. In addition, Comos Walkinside 3D Virtual Reality Viewer, now with connectivity to the Oculus Rift Virtual Reality 3D glasses, enables a more immersive experience, allowing plant personnel to engage in realistic training and virtual commissioning exercises, he says.

“Customers want to get plants from the design stage to up and running in the shortest period of time and these tools are paramount for that,” Ortiz says. “The digital twin is great to use for any plant for the lifecycle of that unique plant.”

Improved maintenance opportunities

While most companies in the automation space are settling in with the digital twin for roles in operator training, virtual commissioning and optimization, there is still not a lot of activity leveraging the concept for predictive and preventive maintenance opportunities. The exception might be GE Digital, which is clearly pushing this use case as its long-term vision.

GE Digital sees four stages of analytics that will be impacted by digital twin and IoT:

- Descriptive, which tells plant operators information about what’s happening on a system like temperature or machine speed.

- Diagnostics, which might provide some context for why a pump is running at over speed, for example.

- Predictive, which uses machine learning, simulation and real-time and historical data to alert operators to potential failures.

- Prescriptive, which will advise operators about a specific course of action.
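The four stages can be sketched on a single signal, using the pump over-speed case mentioned above. This is only an illustration: the speed limit, setpoint, tolerance, readings and function names are hypothetical, and a straight-line extrapolation stands in for the machine learning and simulation that the predictive stage would use in practice.

```python
import math
from statistics import mean

OVERSPEED_LIMIT_RPM = 1900  # hypothetical trip limit


def descriptive(speeds):
    """What is happening: summarize the current readings."""
    return {"latest_rpm": speeds[-1], "mean_rpm": mean(speeds)}


def diagnostic(speeds, setpoint_rpm, tolerance_rpm=10):
    """Why it is happening: compare actual speed with the setpoint."""
    if speeds[-1] > setpoint_rpm + tolerance_rpm:
        return "speed exceeds setpoint: control loop is not holding speed"
    return "speed is tracking setpoint"


def predictive(speeds, limit_rpm=OVERSPEED_LIMIT_RPM):
    """What will happen: samples until the limit is reached,
    extrapolating the average change per sample (None if not rising)."""
    if speeds[-1] >= limit_rpm:
        return 0
    slope = (speeds[-1] - speeds[0]) / (len(speeds) - 1)
    if slope <= 0:
        return None
    return math.ceil((limit_rpm - speeds[-1]) / slope)


def prescriptive(samples_to_limit, horizon=5):
    """What to do: turn the prediction into a recommended action."""
    if samples_to_limit is None:
        return "no action"
    if samples_to_limit <= horizon:
        return "reduce speed setpoint and schedule an inspection"
    return "continue monitoring"


# Hypothetical pump speed readings (rpm), one per sampling interval.
speeds = [1800, 1810, 1825, 1840, 1850]

print(descriptive(speeds))
print(diagnostic(speeds, setpoint_rpm=1800))
remaining = predictive(speeds)
print(remaining)                # 4
print(prescriptive(remaining))  # reduce speed setpoint and schedule an inspection
```

Each stage consumes the output of the one before it, which is why descriptive and diagnostic data quality matters before predictive or prescriptive results can be trusted.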

GE Digital showed off a digital twin representation of a steam turbine to showcase what is possible in the areas of predictive and prescriptive maintenance at its Minds + Machines conference last November.

“A digital twin is a living model that drives a business outcome, and this model gets real-time operational and environmental data and constantly updates itself,” said Colin J. Parris, vice president of software research at GE Global’s research center, during the presentation. “It can predict failures…reduce maintenance costs and unplanned outages, and…optimize and provide mitigation of events when we have these types of failures.”

Though the digital twin is certainly making headway in production, it’s still in its early days. “Digital twin is definitely hot right now, but it really depends on what the customer is trying to achieve and what they are trying to model,” says Bryan Siafakas, marketing manager in Rockwell Automation’s controller and visualization business, adding that it’s just a matter of time. “There is a huge upside in terms of productivity savings and shortened development cycles.”

https://www.automationworld.com/article/topics/industrial-internet-things/digital-twin-spawns-automation-efficiencies

|

First cyber attack on unguarded SIS reported

Jan 16, 2018

One of the first—if not the first—documented cyber attacks on a safety instrumented system (SIS) was reported on Dec. 14 by the FireEye and Dragos blogs, which each released reports about the incident. Their news was acknowledged the same day by Schneider Electric, which supplied the equipment and software affected.

Named either Triton, Trisis or HatMan, this malware reportedly gained remote access to an SIS engineering workstation running Schneider Electric's Triconex safety system at a critical infrastructure facility in the Middle East, and sought to reprogram its SIS controllers. Though the malware doesn't use any inherent vulnerability in Schneider Electric's devices, the malware's intrusion was possible because the user's equipment had allegedly been left in "program" mode, instead of being switched to "run" mode that wouldn't have allowed reprogramming. However, a subsequent mistake by the malware was detected by the SIS, which triggered a safe shutdown of the application.

"It appears the attacker had access to the safety control system and developed its malware over several weeks," says Andrew Kling, director of cybersecurity and architecture at Schneider Electric. "On Aug. 4, there was a mistake in the malware that was picked up by the Tricon equipment. As a result, the safety instrumented system took the process to a safe state and tripped the plant."

Kling reports that Schneider Electric learned its customer's safety system was affected within a few hours, and has been investigating it along with the user's security team, FireEye, and the U.S. Dept. of Homeland Security (DHS). Reports of the incident went mainstream when FireEye and Dragos posted news about it on Dec. 14 on their blogs.

"It's likely a plant shutdown wasn't the intended result of the malware," explains Kling. "It’s clear that this was a specific attack against a specific site, and not viral or weaponized. Had the plant not tripped, it’s likely the malware would have gone unnoticed.”

Consequently, Kling stressed it's important for process control users not to overreact in response to the Triton attack, and instead make sure their cybersecurity programs are up to date and sufficient to meet existing threats. "As reported by FireEye and Dragos, a series of missteps by the Triconex user enabled this attack to happen," says Kling. "For example, the SIS was connected to the network demilitarized zone (DMZ) at the enterprise level, and that enabled access to the SIS. The attackers were able to break into a Microsoft Windows workstation where Triconex and the SIS model were located, and probably most significantly, the Tricon’s memory protection functions (a physical key on the front panel) had been left in program mode, which allowed the Tricon’s memory to be altered by the attackers."

Kling describes Schneider Electric's short-, medium- and long-term mitigation strategies for cybersecurity incidents:

• Short-term consists of recommending that users follow standard and documented security practices, which include putting the memory switches on the front of their Tricon units in run mode when the units are not actively being programmed, which will protect their memory from intentional or accidental writes, and then removing and securing the key.

• Medium-term advice is that users consider doing a site assessment, and develop an updated cybersecurity plan. Kling states, “The security plan should be part of any company’s risk management plan. A regular review of the site and plan is a best practice.”

• Long-term involves Schneider Electric and its users jointly working with DHS and other security professionals to develop more and better protections against these and other cybersecurity threats and attacks.

"The problems highlighted by this one incident are not peculiar to Triconex and represent an opportunity for the industry to reflect upon the strategies we use to protect our plants,” explains Kling. "We're not trying to sweep any of this under the rug. This is a very public effort, and we want open communications to get information out to everyone, so the whole cybersecurity community can help solve these challenges.”

Control global article

|


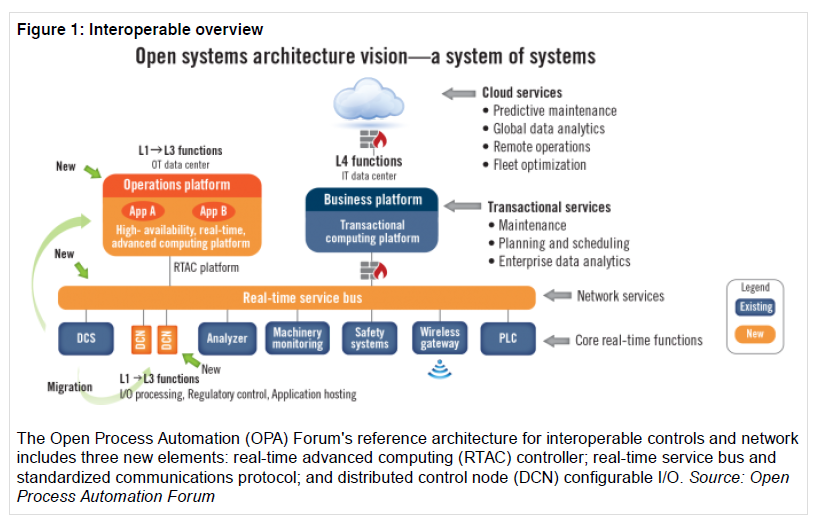

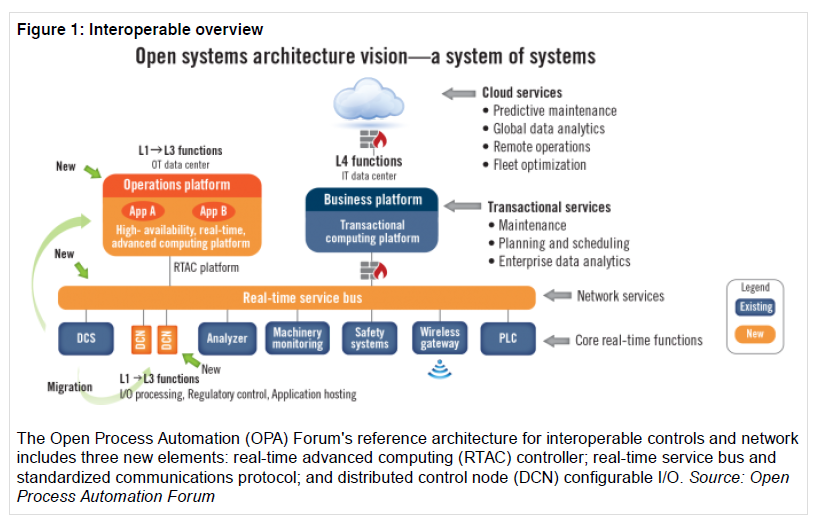

How oil companies lead charge to open, secure, interoperable process control systems

In the process industries, end users face many similar forces: end-of-life and obsolete equipment and facilities, increasingly large and complex projects with ever-tightening deadlines, and unfortunately, some control suppliers unwilling to provide interoperable components and networking. Users have coped with these occupational hazards for decades, of course, but now they're compounded by tightening margins due to reduced energy prices from fracking and plentiful supplies of natural gas and oil.

“A lot of our systems are becoming obsolete, and we need to replace them and continue to add value. Traditional DCSs weren't solving our business problems, so in 2010, we began an R&D program and in 2014, we developed functional characteristics we could take to the process industry,” says Don Bartusiak, chief process control engineer at ExxonMobil Research and Engineering Co. (EMRE). “Our vision is a standards-based, open, secure, interoperable process automation architecture, and we want to have instances of the system available for on-process use by 2021.”

Breaking the interoperability barrier.pdf


|

IIoT Technology Trends to Watch for in 2017

Recommendations

End users and OEMs alike should embrace, rather than resist, the positive disruptive change that IIoT brings. IIoT’s initial focus should be on asset management and avoiding downtime. Automation suppliers must help their customers calculate the ROI justification needed to invest in these new IIoT solutions. Legacy assets must remain a part of, and be integrated into these latest IIoT technology solutions, wherever possible. All in all, these trends and changes make this a very exciting time to be in the automation space, and the future is likely to be even brighter.

|
